Digital Assistants, Facebook Quizzes, And Fake News! You Won’t Believe What Happens Next

This is the talk I gave at DIBI Conference in Edinburgh in March 2017. Many people asked me for my slides, but I wanted to include them in context, with accessible links. This is roughly how it went:

Performance!

Nowadays performance seems to be the hot topic of the web industry. So let’s have a look at how we might improve the speed of a web page.

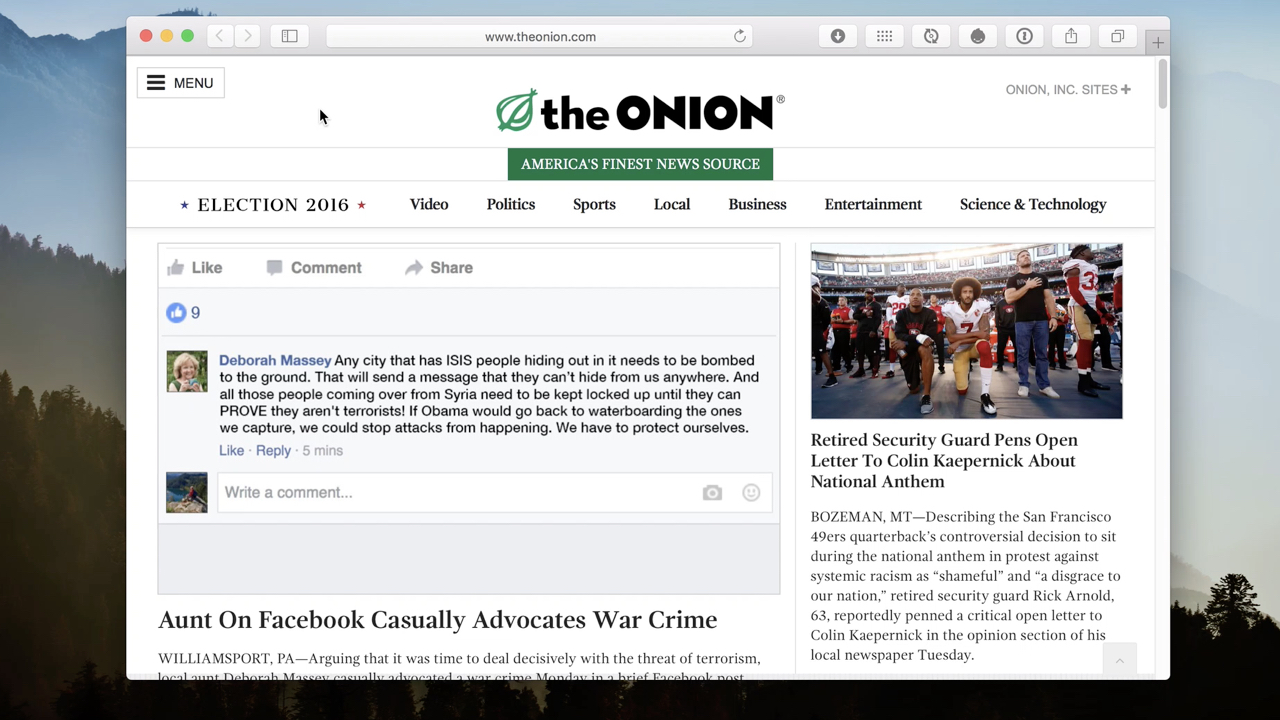

theonion.com

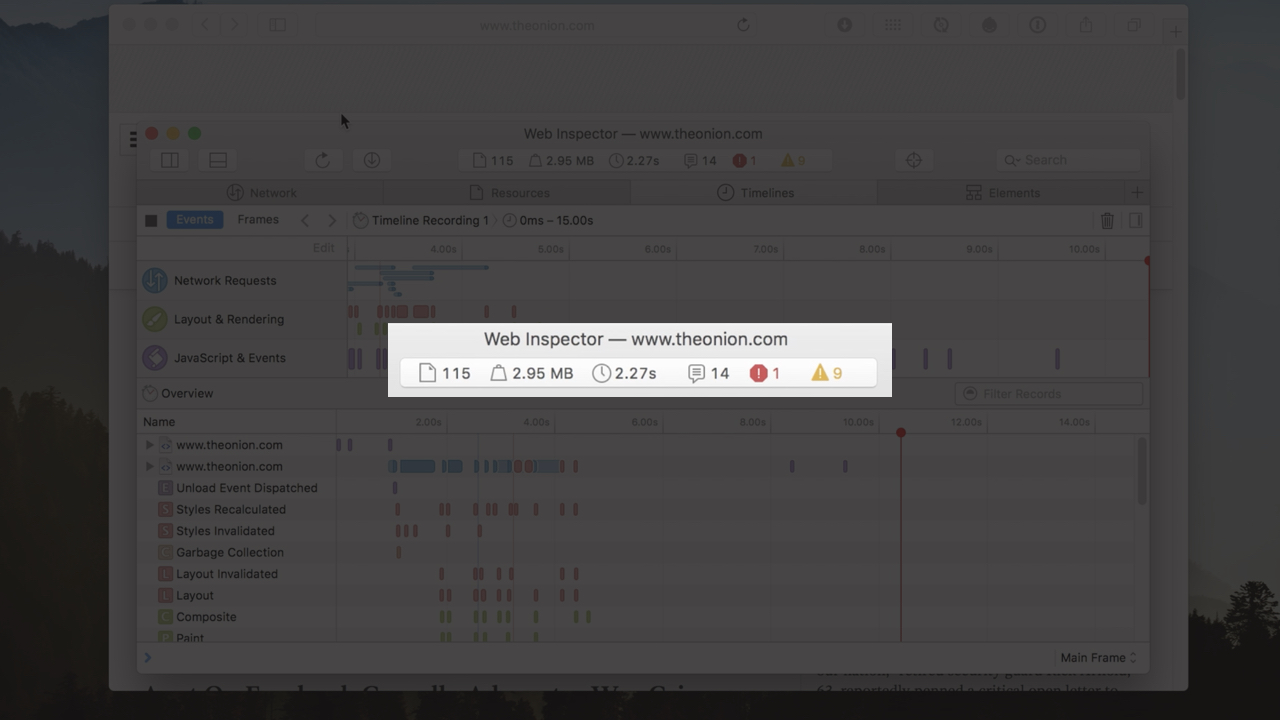

Looking at the Onion homepage, you can see it’s loading lots of images and a few videos. It’s mostly images and text content. There’s a big sticky ad at the bottom of the viewport that takes a while to load.

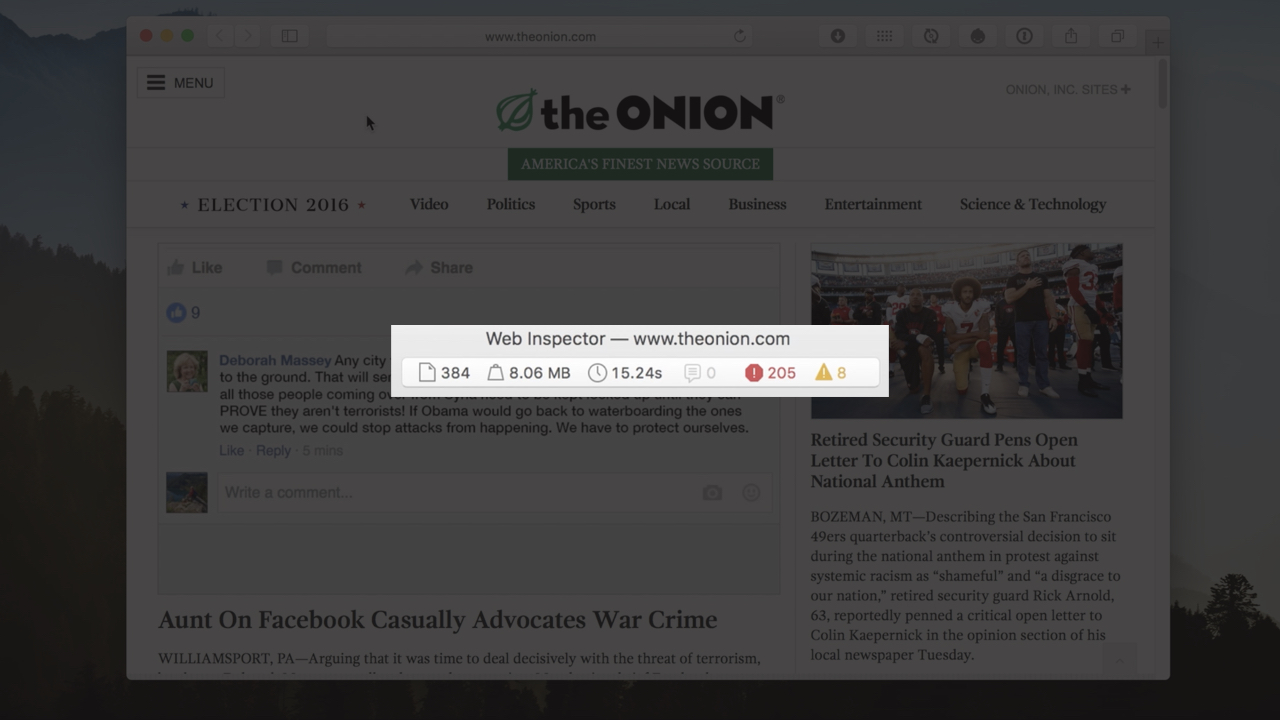

If you look at what’s loading behind the scenes, you can see the layout being rendered, loading in all the content first, then more and more requests as new stuff is loaded in. All in all, they’re loading 384 resources, coming in at 8MB, taking 15.24 seconds minimum to load. (There are also 205 errors, which may well be impacting that load time.)

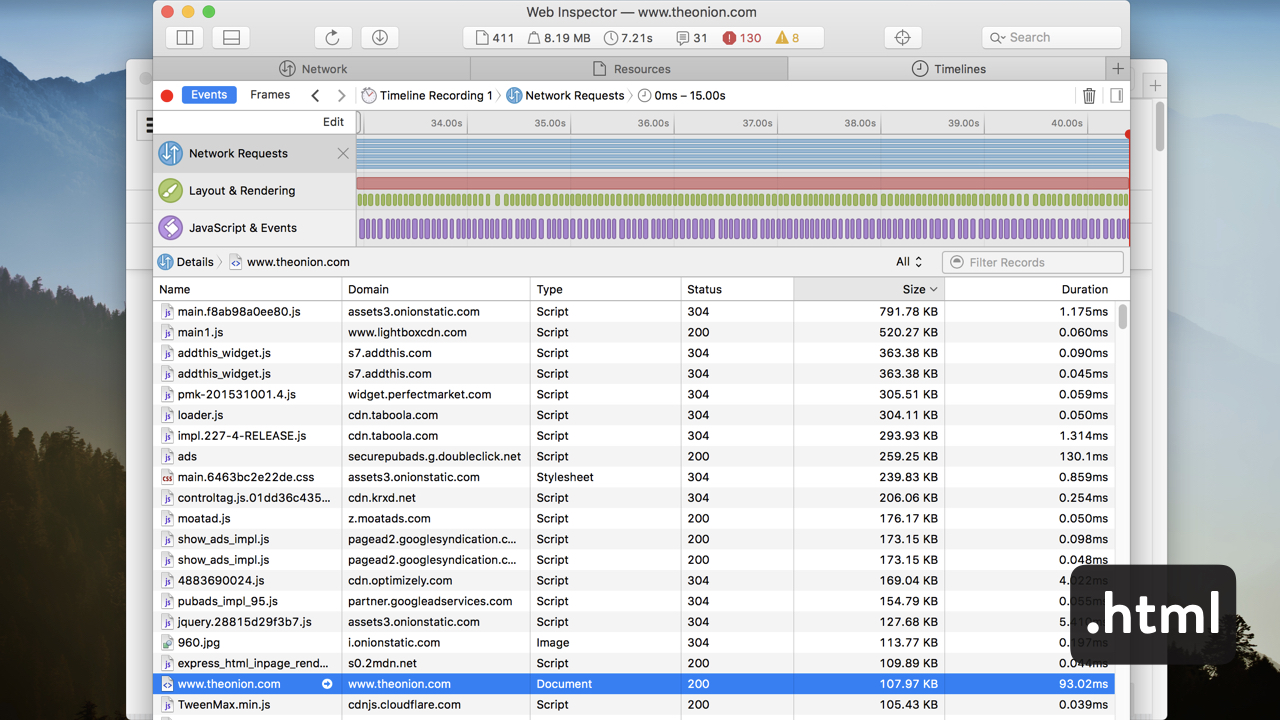

If we look at Safari’s Network Requests tab, we can see a little more of what’s being loaded in. If we look at the requests by size:

- .html the primary HTML document is only the 19th largest file being loaded

- The largest file is .js, a biggish JavaScript file, that’s fairly standard

- The next .js file is a lightbox script, there’s an external CDN for fast loading, that’s fine.

- .css is CSS, which is very important!

- .js is jQuery, fine if you must…

- .jpg is a big picture of houses

But then there is also…

- addthis.com AddThis social buttons

- addthis.com more AddThis

- perfectmarket.com Perfect Market

- taboola.com Taboola

- taboola.com Taboola again

- doubleclick.net Google DoubleClick

- krxd.net Krux

- moatads.com Moat Ads

- googlesyndication.com Google Syndication

- googlesyndication.com Google Syndication again

- optimizely.com Optimizely

- googleadservices.com Google Ad Services

- 2mdn.net which I know is another domain for Google DoubleClick.

I could go on for the other 408 requests but I’d be here for a week. These are all scripts for tracking people in one way or another.

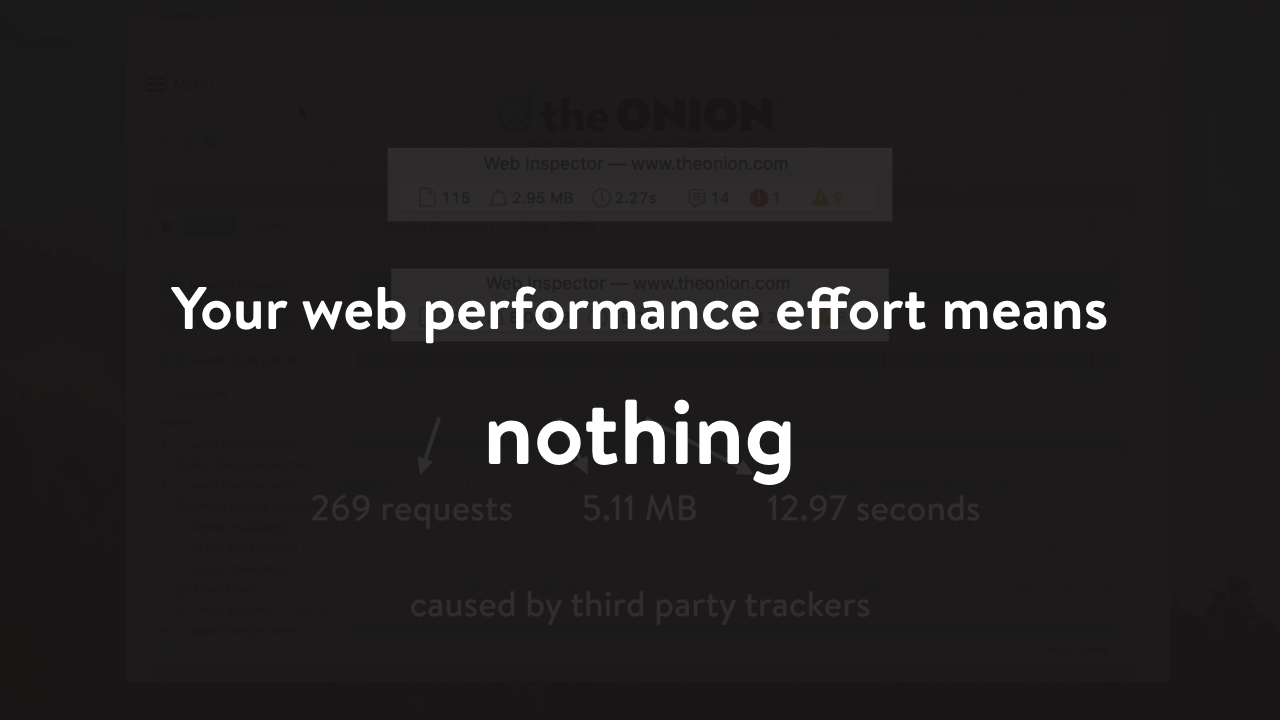

What would happen if we just load the main page without any third party tracking scripts? Let’s look again at that timeline, but this time with those third party tracking scripts blocked:

The Onion now has 115 requests, coming in at 2.95MB, loading in just 2.27 seconds.

Comparing the before and after, that’s 269 requests, 5.11MB and 12.97 seconds caused by third party trackers.
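As a quick check of that arithmetic, here is a minimal Python sketch using the figures quoted above. The variable and function names are mine. Because the 8MB total is a rounded figure, the computed size difference (5.05MB) lands slightly below the 5.11MB measured in the talk.

```python
# Figures quoted in the talk for theonion.com (8 MB is a rounded total).
with_trackers = {"requests": 384, "megabytes": 8.0, "seconds": 15.24}
without_trackers = {"requests": 115, "megabytes": 2.95, "seconds": 2.27}


def tracker_overhead(before, after):
    """Return the per-metric difference between the two page loads."""
    return {key: round(before[key] - after[key], 2) for key in before}


overhead = tracker_overhead(with_trackers, without_trackers)
print(overhead)
```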

You know what that means? Your web performance effort counts for little to nothing if your organisation’s business model requires a gazillion tracking scripts to make money.

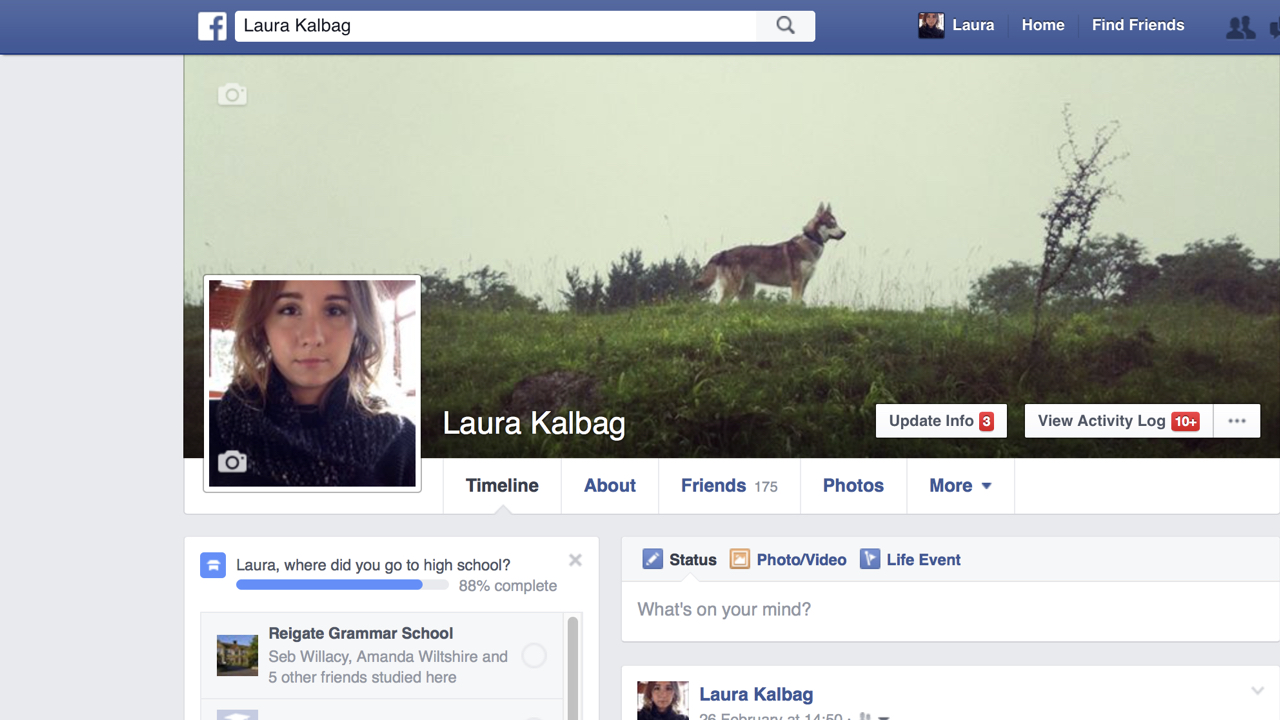

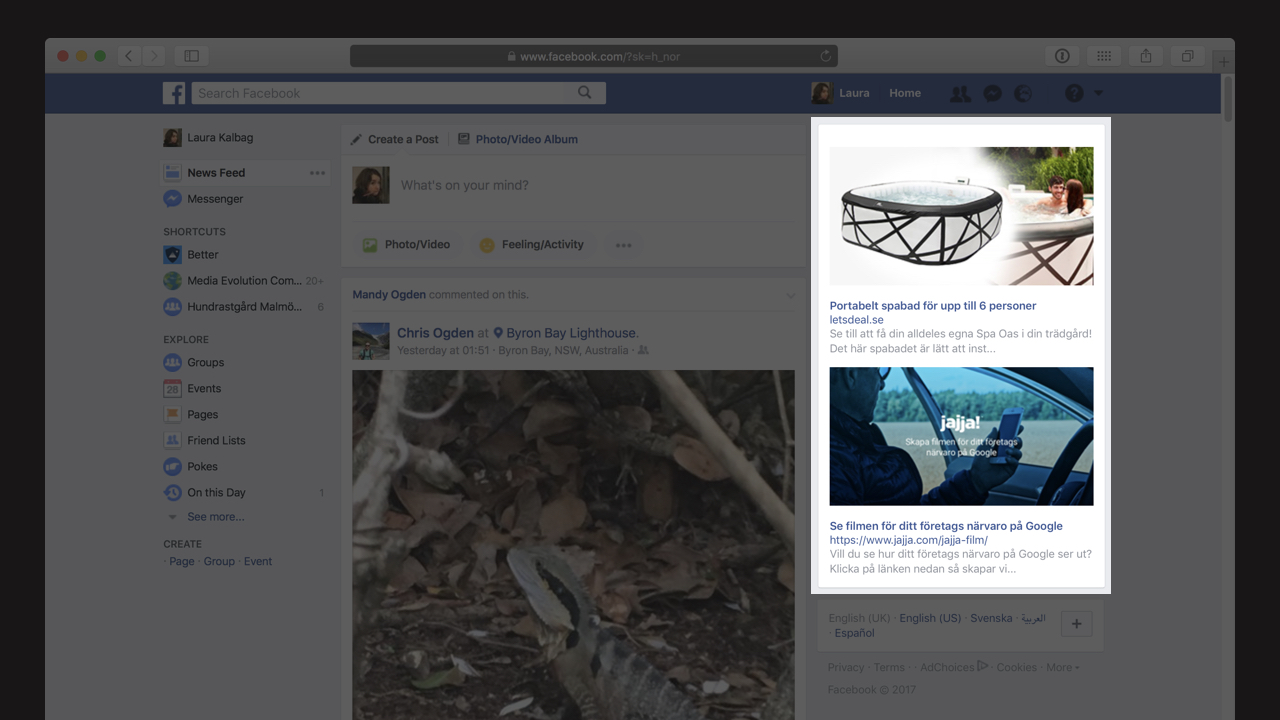

Me on Facebook

Let’s look at this phenomenon from the perspective of the people browsing the web. We feel like we know Facebook’s game. They show us adverts, and we get to socialise for free. Simple as that?

I don’t give Facebook much info, I get ads for the average 30 year old woman: washing liquid, shampoo, makeup, dresses, more dresses, and sometimes a wild card ad for pregnancy tests. But what happens when Facebook gets a bit more information?

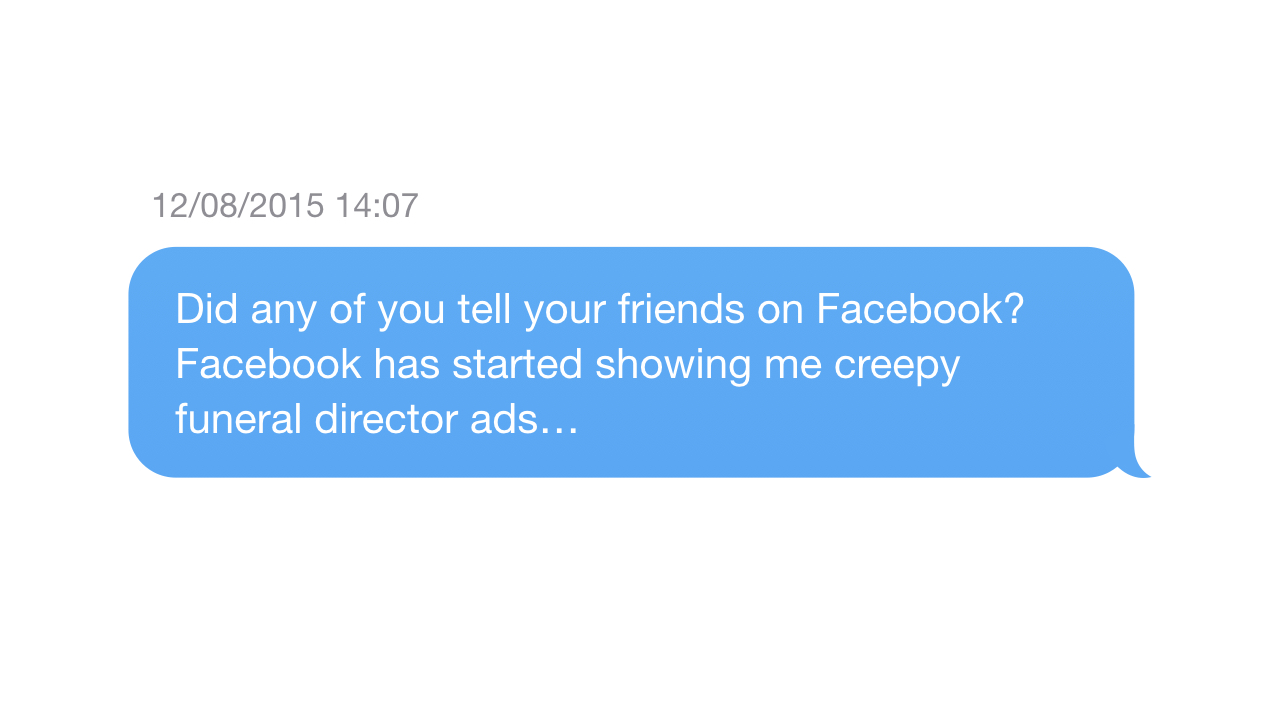

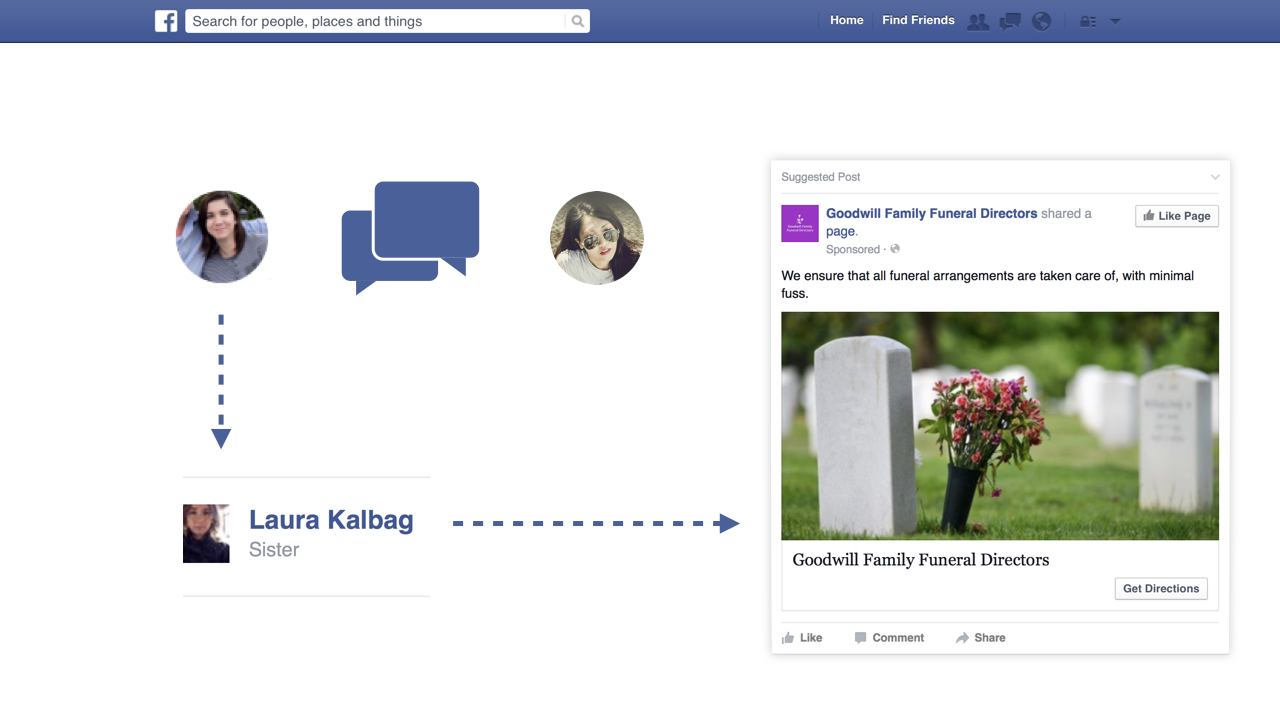

Nearly two years ago, my mother died. We didn’t want any of our friends and family finding out from a poorly thought-out tweet or Facebook post… so we didn’t post anything on social media. We rang people to let them know. And then Facebook suggested I might be interested in… Goodwill Family Funeral Directors.

Surely just a coincidence?

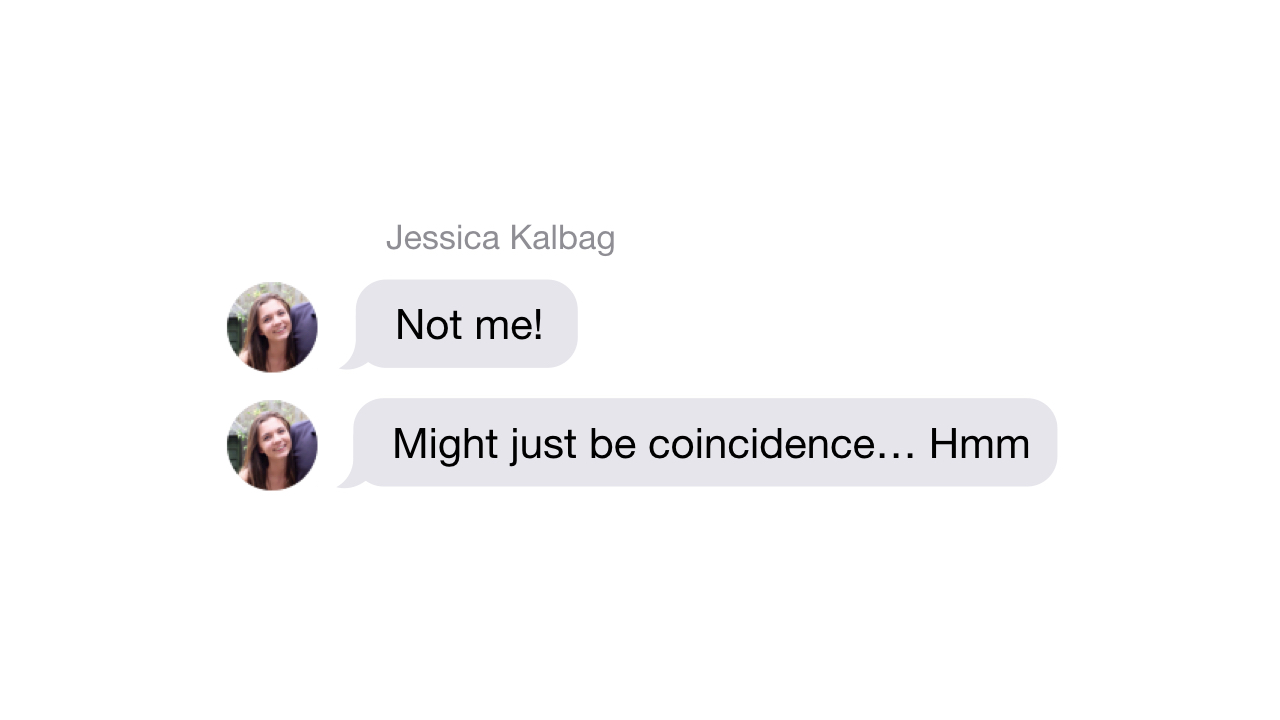

So I asked my sisters and brother if any of them had posted something on Facebook…

Well, it might just be a strange coincidence, but it seems too close to be one. My sister had told her friend via private message. She’d used some key words in a Facebook message to her friend Maddy. Facebook may have made the connection that I’m her sister, figured out that I might want a funeral director, and stuck that ad in my feed. It just goes to show how much Facebook knows, and how much complexity it can grasp, despite my not telling it anything.

How do they know?

But how else could Facebook have known?

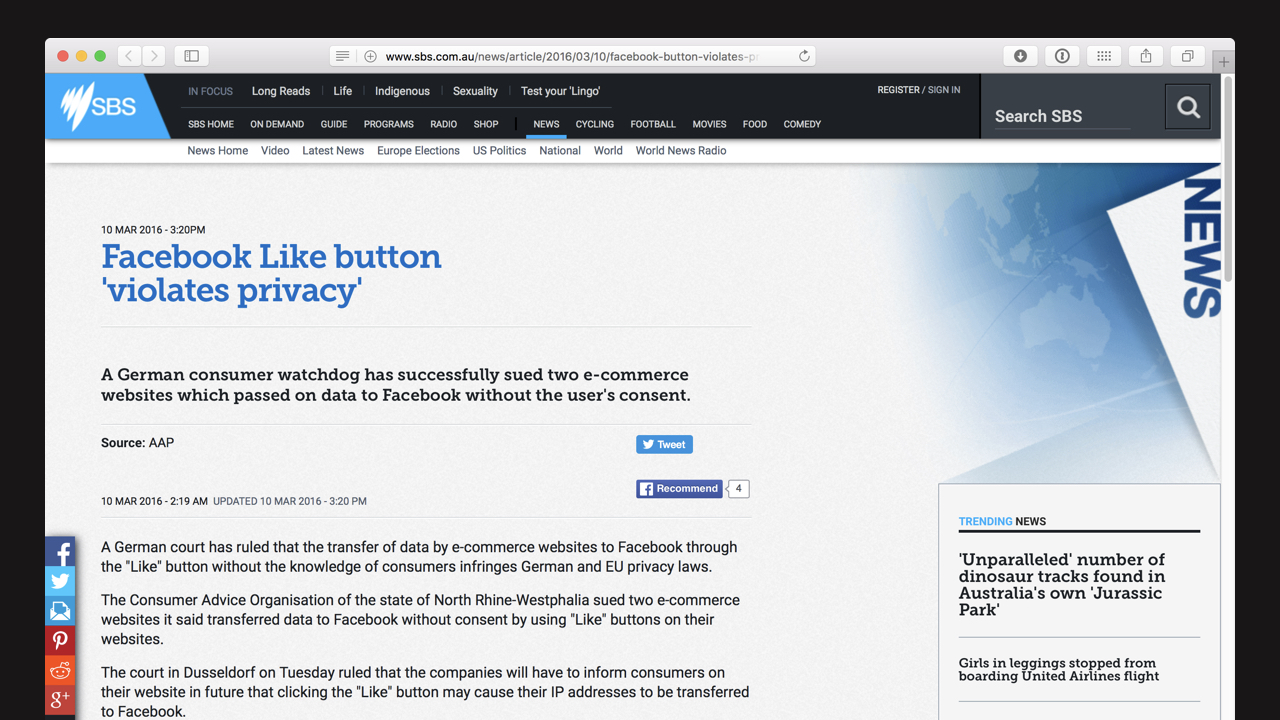

If I was browsing funeral-related sites, maybe Facebook would have that information if those sites had Facebook Share Buttons or Facebook login. As this article in SBS News points out, those Facebook buttons track you across the web, violating your privacy. (Also note the hypocrisy of this news site using multiple Facebook buttons on this article.)

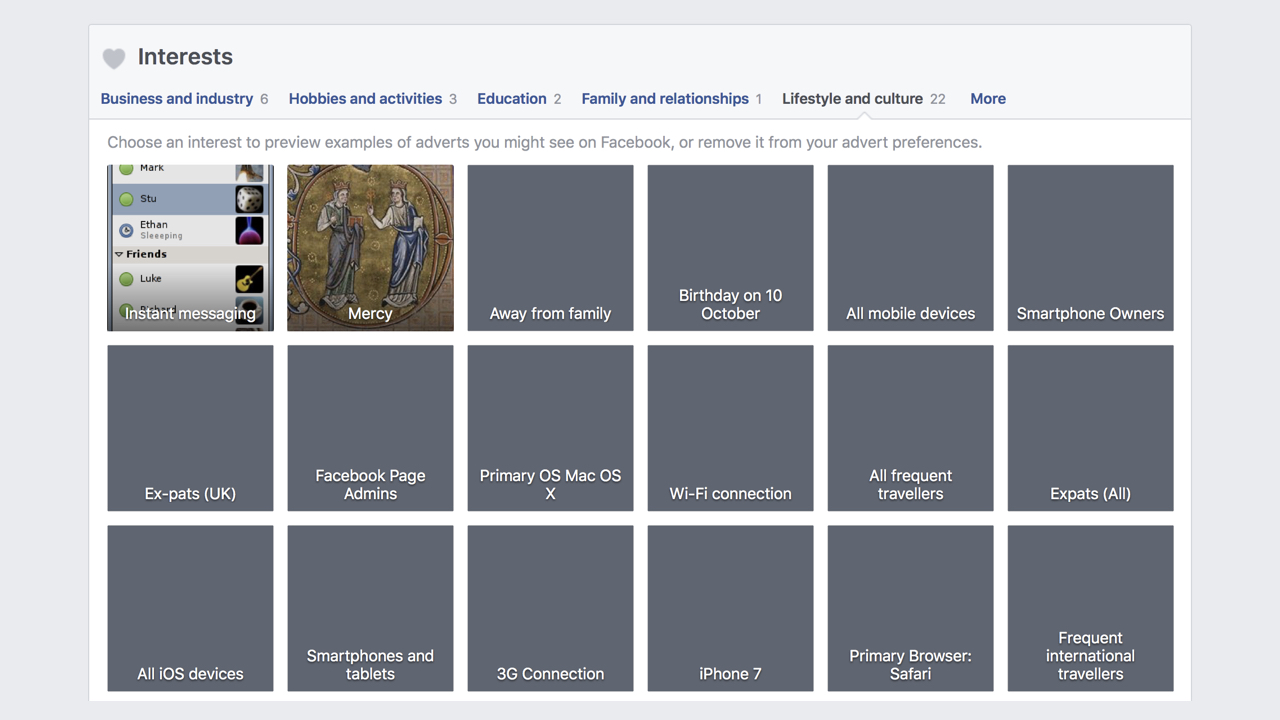

You can find out some of the things Facebook knows about you in your Ad Preferences.

But as ProPublica point out, Facebook doesn’t tell its users everything it really knows about them:

“What the [Facebook ads] page doesn’t say is that those sources include detailed dossiers obtained from commercial data brokers about users’ online lives. Nor does Facebook show users any of the often remarkably detailed information it gets from those brokers.”—Julia Angwin, Terry Parris Jr. and Surya Mattu, December 2016

I’ll come back to data brokers later.

“The fundamental purpose of most people at Facebook working on data is to influence and alter people’s moods and behaviour. They are doing it all the time to make you like stories more, to click on more ads, to spend more time on the site.” A data scientist who previously worked at Facebook

If your first thought is “but isn’t that what we’re all trying to do”, you need to watch some sci-fi. The Nosedive episode from Black Mirror series 3 may seem far-fetched, but we are already participating in this ranking. It’s just not visible to us.

“But you don’t have to be on Facebook!” We hear this all the time from people who have never joined Facebook, have no intention of leaving Facebook, who don’t socialise, or who are generally pedantic and tedious. My answer is: NOPE.

You can’t just leave Facebook

You can’t just leave Facebook. First of all, you’d end up severing social ties, miss out on events and other social interactions that people limit to Facebook. But even if you do leave, or if you never joined, Facebook has a shadow profile on you.

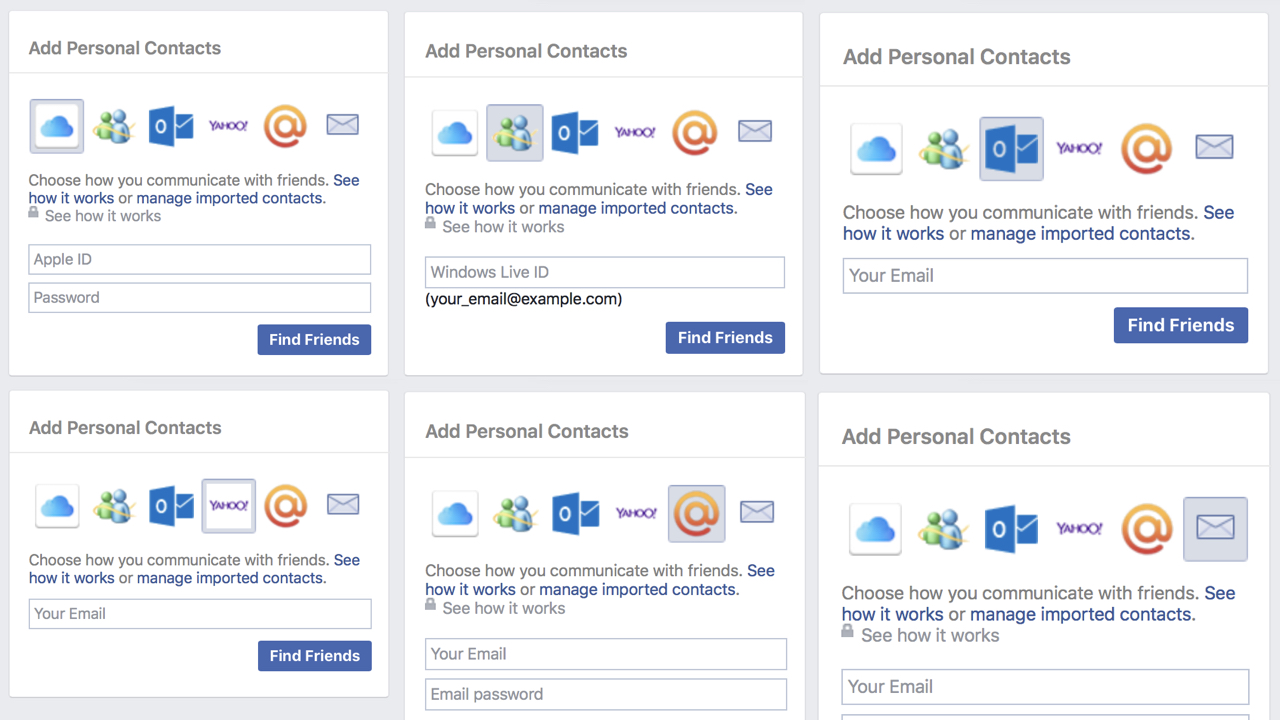

If a friend or acquaintance has used the Find Friends functionality, or used Facebook Messenger on their phone, they gave Facebook access to their Contacts. Facebook uses those names, email addresses, and phone numbers to build their shadow profiles.

The following quote is nearly four years old:

“Right now commenters across the Internet will be saying, Don’t join Facebook or Delete your account. But it appears that we’re subject to Facebook’s shadow profiles whether or not we choose to participate.

I feel like we’re only beginning to understand why Facebook’s data is so very valuable to advertisers, governments, app makers and malicious entities.”—Violet Blue, Zero Day. June 2013

Facebook isn’t alone

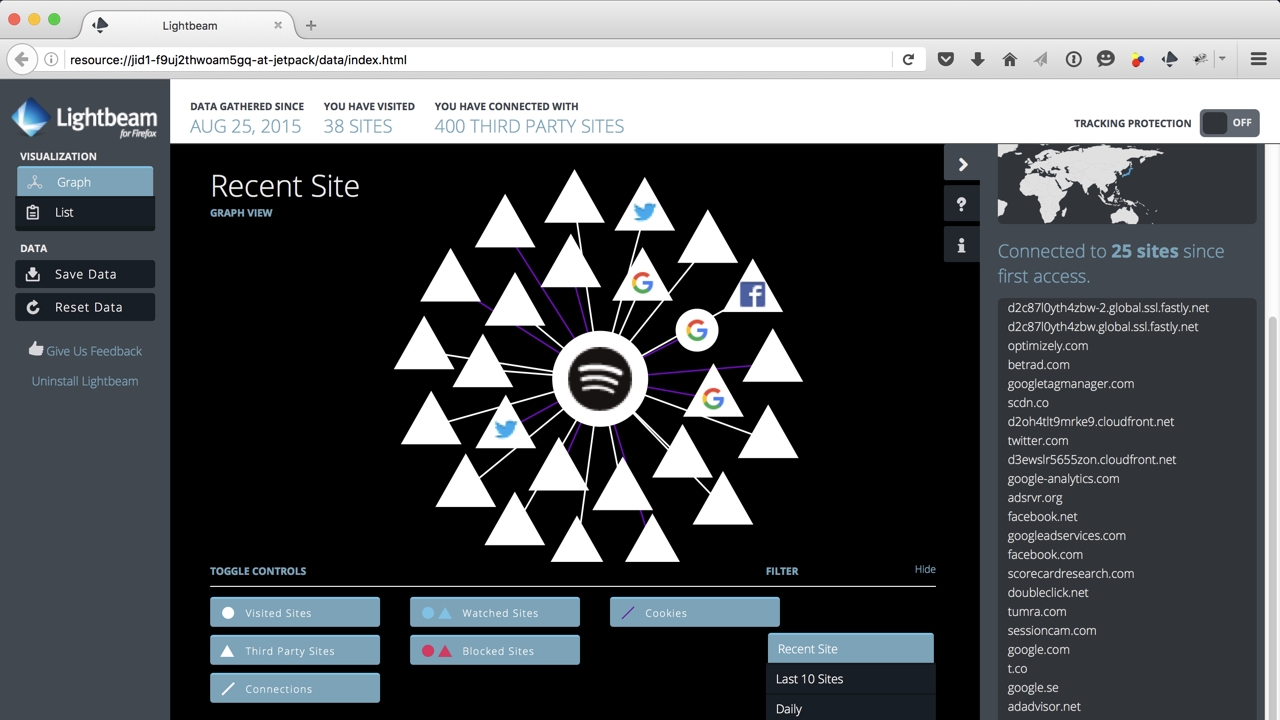

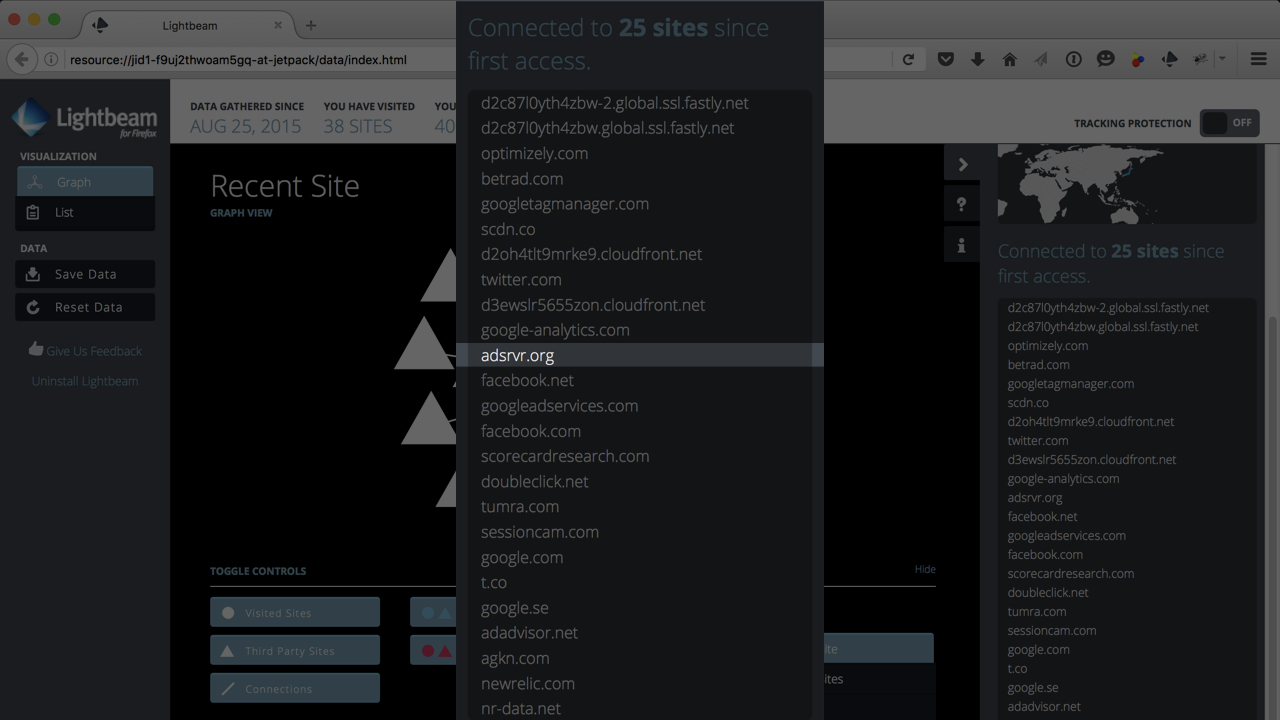

Facebook isn’t the only place this happens. Say I go to the Spotify website, just to download Spotify, using Firefox with the Lightbeam extension. (Lightbeam is a Firefox add-on that shows you who is tracking you via third-party scripts.) Lightbeam shows me that Spotify sends my data to 25 sites.

Let’s have a closer look at these sites… there are a few that are familiar to developers…

- Google Analytics, we know that’s analytics

- Twitter and Facebook, possibly some kind of sharing buttons (but also possibly not)

But that leaves 21 more mysterious scripts… Let’s have a close look at one of those sites…

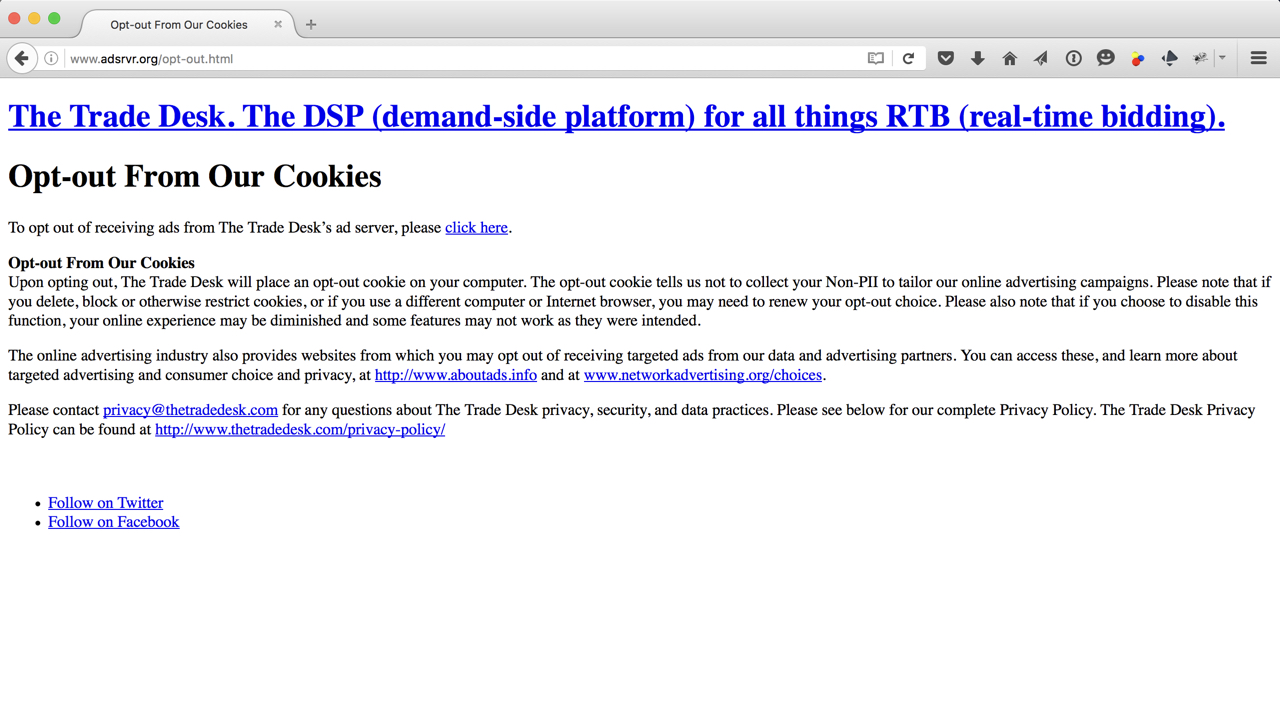

What is adsrvr.org?

A quick search for adsrvr.org brings me to an opt-out page which shows me this site belongs to The Trade Desk. The Trade Desk is apparently: “true buying power” and “omnichannel buying capabilities and industry-leading tech”. Whatever that means.

At Ind.ie, we’ve been doing a lot of research into trackers lately, and here’s a top tip: always check out the privacy policy. Most tracking companies are the clearest about their agendas in their privacy policies.

“The Trade Desk Technology allows our Clients to buy ad space on websites for online advertising and allows for the use of proprietary and third party data in the purchase of that media.”—The Trade Desk Privacy Policy

“Our Technology collects Non-Personally Identifiable Information (“Non-PII”) that may include, but is not limited to…

- Your IP host address,

- the date and time of the ad request,

- pages viewed,

- browser type,

- the referring URL,

- Internet Service Provider,

- and your computer’s operating system,”

That’s a lot of your information going into the cloud. But did you notice the caveat? Non-personally identifiable information.

Non-personally identifiable information

“Such as your IP host address, age, gender, income, education, interests and usage activity…” The Trade Desk Privacy Policy

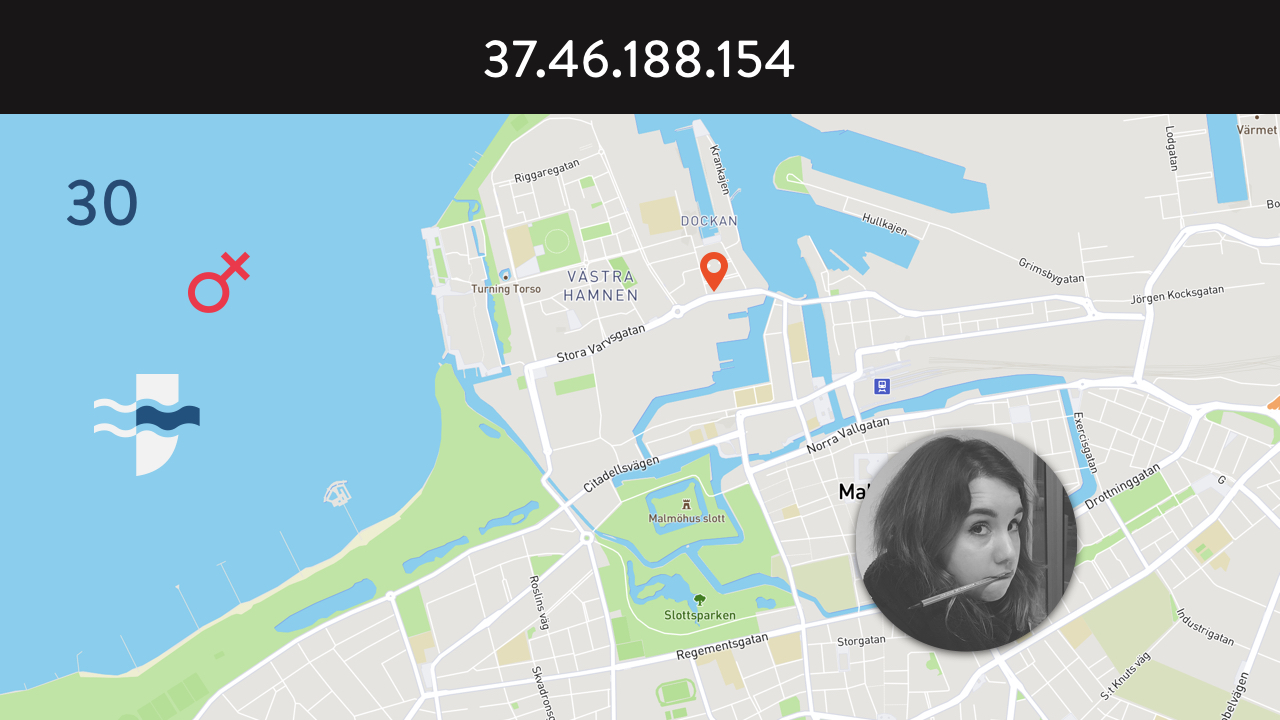

Take my IP address where I work in Malmö… it can pinpoint me to exactly where I am 25% of the time. And then if you add in my age, gender and education (which is all easily available on Facebook, LinkedIn, etc)… How many 30 year old women who studied in Somerset in the UK are working in that building in Malmö 800 miles away? It’s definitely this idiot.

But they “don’t share it.” “It’s non-personally identifiable information.” But how true is that really? In ‘Why “Anonymous” Data Sometimes Isn’t’, Bruce Schneier, a cryptographer, computer security and privacy expert says:

“it takes only a small named database for someone to pry the anonymity off a much larger anonymous database”— Bruce Schneier.

With more than one dataset, and the right algorithm, nearly any dataset can be de-anonymised. An example of these databases could be Sweden’s hitta.se, or the UK’s electoral roll. With these publicly-available databases, any or all of this data could be connected to me as a person.
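To make that concrete, here is a minimal Python sketch of a linkage attack. Every record, field name and value below is invented for illustration. The point is only that joining an “anonymous” dataset to a small named one on shared attributes can single a person out.

```python
# Hypothetical "anonymous" tracking records: no names, only attributes.
anonymous_records = [
    {"age": 30, "gender": "F", "city": "Malmö", "interest": "fitness trackers"},
    {"age": 45, "gender": "M", "city": "Malmö", "interest": "golf clubs"},
    {"age": 30, "gender": "F", "city": "Lund", "interest": "running shoes"},
]

# Hypothetical public directory: names alongside the same attributes.
public_directory = [
    {"name": "Person A", "age": 30, "gender": "F", "city": "Malmö"},
    {"name": "Person B", "age": 45, "gender": "M", "city": "Malmö"},
    {"name": "Person C", "age": 45, "gender": "M", "city": "Malmö"},
]

QUASI_IDENTIFIERS = ("age", "gender", "city")


def link(anonymous, named, keys=QUASI_IDENTIFIERS):
    """Name each anonymous record whose attributes match exactly one person."""
    matches = []
    for record in anonymous:
        signature = tuple(record[k] for k in keys)
        candidates = [p["name"] for p in named
                      if tuple(p[k] for k in keys) == signature]
        if len(candidates) == 1:  # unique match: the record is de-anonymised
            matches.append((candidates[0], record["interest"]))
    return matches


linked = link(anonymous_records, public_directory)
print(linked)
```

In this toy data only the first record is linked to a name. The second matches two people, so it stays ambiguous, which is why adding one more attribute (an employer, a postcode) is often all it takes.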

This means many of these third parties can work out a lot more about me and my habits, but surely they don’t know me as…a person?

“Facebook knows you better than your members of your own family”—Sarah Knapton, Science Editor, Daily Telegraph. January 2015

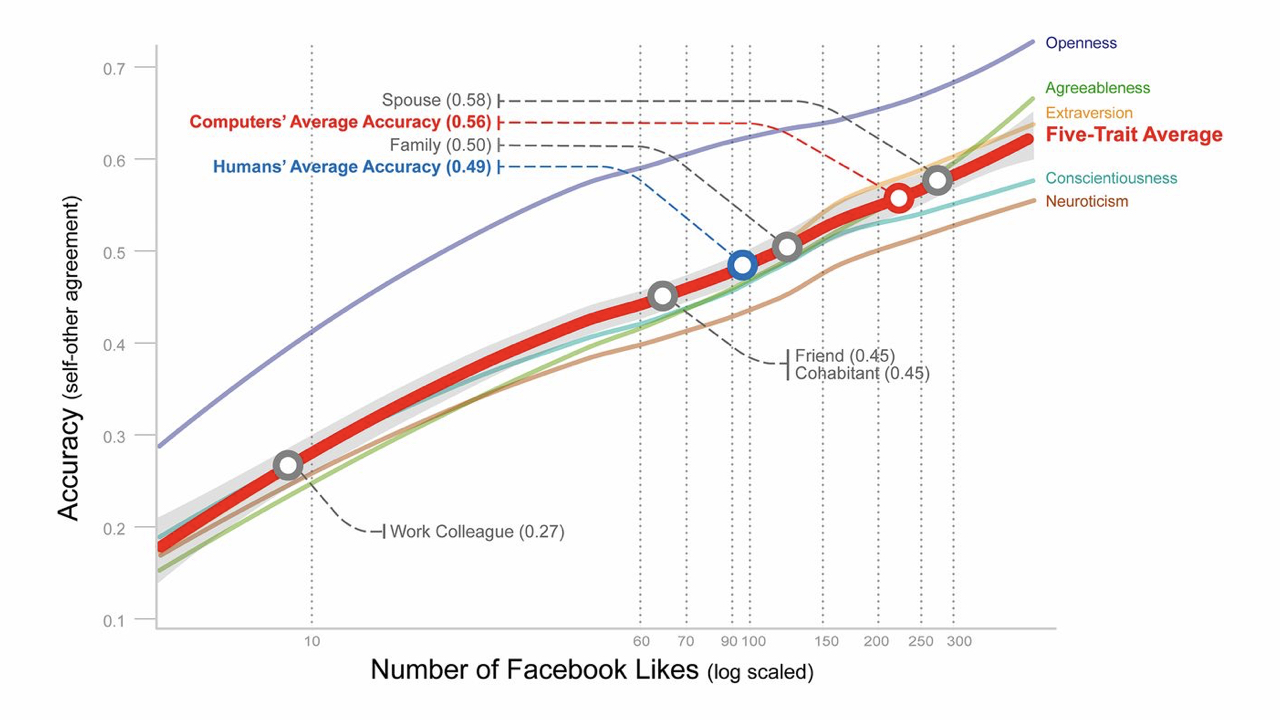

Psychologists and a Computer Scientist from the Universities of Cambridge and Stanford discovered that computer-based personality judgments are more accurate than those made by humans.

“The team found that their software was able to predict a study participant’s personality more accurately than a work colleague by analysing just 10 ‘Likes’.”—Sarah Knapton, Science Editor, Daily Telegraph. January 2015

“Inputting 70 ‘Likes’ allowed it to obtain a truer picture of someone’s character than a friend or room-mate, while 150 ‘Likes’ outperformed a parent, sibling or partners.”—Sarah Knapton, Science Editor, Daily Telegraph. January 2015

“It took 300 ‘Likes’ before the programme was able to judge character better than a spouse.”—Sarah Knapton, Science Editor, Daily Telegraph. January 2015

With the introduction of Facebook reactions, there’s even more Facebook can know about you because they have more specific data. Police in Belgium have even warned people about using Facebook reactions…

“Belgian police now says that the site [Facebook] is using them as a way of collecting information about people and deciding how best to advertise to them. As such, it has warned people that they should avoid using the buttons if they want to preserve their privacy.”—The Independent

“By limiting the number of icons to six, Facebook is counting on you to express your thoughts more easily so that the algorithms that run in the background are more effective,” the post continues. “By mouse clicks you can let them know what makes you happy.”—The Independent

That is real information from the Belgian police. How many things have you liked on Facebook? Do you use the other reactions too?

Welcome to the world of the Data Broker

And this is why data brokers exist. They are corporations whose business it is to collect and combine data sets:

“LexisNexis helps uncover the information that commercial organizations, government agencies and nonprofits need to get a complete picture of individuals, businesses and assets…”

“Only Acxiom connects people across channels, time and name change at scale by linking our vast repository of offline data to the online environment”

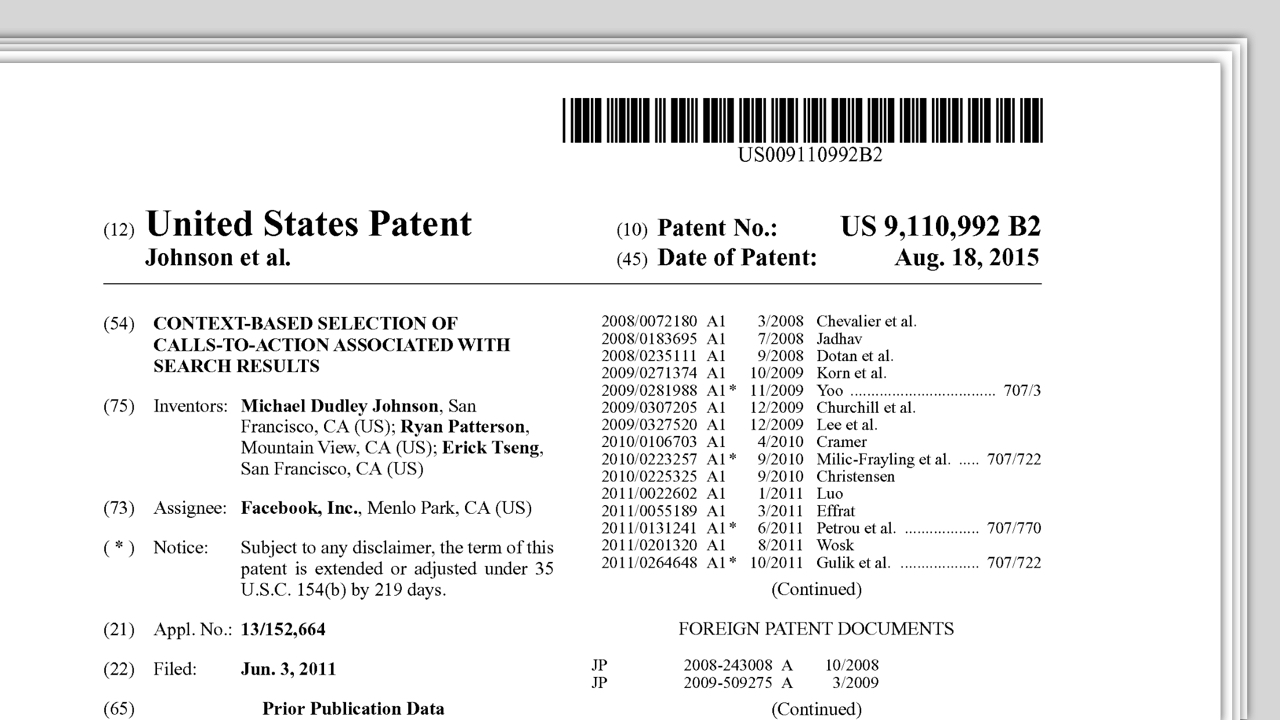

It’s a big money business, and the different ways to monetise you are endless. For example, a couple of years ago, Facebook was granted a patent:

“When an individual applies for a loan, the lender examines the credit ratings of members of the individual’s social network who are connected to the individual through authorized nodes. If the average credit rating of these members is at least a minimum credit score, the lender continues to process the loan application. Otherwise, the loan application is rejected.”

Yes, that means what you think it does. It means they want to approve loans based on the financial records of your Facebook friends. And would that make you think about your friends in a different light? What about when your friend unfriends you on Facebook because you’re too poor?
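The rule quoted from the patent can be sketched in a few lines of Python. The function name, the threshold and the scores are hypothetical. The quoted text only specifies comparing the average rating of connected members against a minimum score.

```python
from statistics import mean


def continue_processing_loan(friend_credit_scores, minimum_score):
    """Return True if the average score of the applicant's connections meets the minimum."""
    if not friend_credit_scores:
        # The quoted text does not cover an empty network; rejecting is an assumption.
        return False
    return mean(friend_credit_scores) >= minimum_score


# Hypothetical applicant: their own finances never enter the decision.
print(continue_processing_loan([720, 680, 540], minimum_score=650))
print(continue_processing_loan([720, 680, 640], minimum_score=650))
```

Note that swapping a single friend’s score is enough to flip the outcome in this sketch, which is exactly the incentive to unfriend people described above.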

Governments and corporations

Privacy advocates have long worried about the data that is collected by corporations getting into the hands of unfriendly governments. With the political situation we find ourselves in now, those privacy advocates are unfortunately being proved right:

Border controls across the world are using social media to discriminate against travellers, even demanding that those under scrutiny enter their passwords to give authorities access to their accounts. Of course, this disproportionately affects people of colour and other victims of bigoted profiling. People arrested during protests on Trump’s Inauguration Day had investigators requesting their data from Facebook to help incriminate them. And anti-choice groups have used behavioural advertising on phones to target women in healthcare clinics. Erin Kissane put it best in her post on how to “Be More Careful on Facebook” when she said:

“If you believe Facebook will keep your data safe and never let it be used against you or your most vulnerable contacts, by governmental or private entities, you’re putting your faith in an entity that has demonstrated bad faith for years.”—Erin Kissane, Be More Careful on Facebook, February 2017

Data grabbing is the dominant business model of mainstream technology

I may be spending an unequal amount of time focusing on Facebook when grabbing your data is really the dominant business model of mainstream technology. I thought I’d insert here a list of products and services that collect your information without really needing it, but I realised it’d be unending. Instead, I’ve made a list of products whose information on you would probably make you a little uncomfortable…

- Google Nest, home security that will have a good look around your home

- Hello Barbie, a doll who your kids can talk to, recording all their responses, and sending it back to a multinational corporation

- Smart pacifier, in case you wanted to put a chip in your baby.

- Your medical records. Because it’s fine if Google has those, right?

- Looncup, a smart menstrual cup! It’s one of many smart things that women can put inside themselves. (Note that most of the internet of things companies in this genre are run by men…)

- We Connect’s smart dildo, which was found to be tracking its users’ habits

And have you ever wondered how many calories you’re burning during intercourse? How many thrusts? Speed of your thrusts? The duration of your sessions? Frequency? How many different positions you use in the period of a week, month or year? Then you want the iCondom.

That’s assuming you want all that information shared with advertisers, insurers, your government, and whoever else wants to buy it…

Needless to say, beware the Internet Of Things That Spy On You.

Learning about yourself ≠ corporations learning about you

Some of these products are really cool. I was an early adopter of fitness trackers. I love to know data about myself, and use that to encourage better habits. Learning about yourself does not have to mean that corporations learn about you too. None of these Internet Of Things products need to share your data back to their various clouds, or with any other parties, to be convenient to you. These are physical products. They have a clear option for a business model: they can be sold for money.

Not to mention, collecting information about people can be dangerous. Any organisation collecting data has to be able to keep it secure and safe from malicious parties. Glow, a pregnancy app, was discovered to have vulnerabilities making it easy for stalkers, online bullies, or identity thieves to use the information they gathered to harm Glow’s users.

Chilling Effect

Even if you’re aware that these products are spying on you, and you continue to use them, they can still affect you in adverse ways. They can create a “chilling effect.” A chilling effect is where a person may behave differently from how they would naturally, because of the legal and social implications of their actions.

From the other side, products with information about you are far more able to manipulate you. Products like digital assistants such as Amazon’s Alexa or Google Now…

“As the digital butler expands its role in our daily lives, it can alter our worldview. By crafting notes for us, and suggesting “likes” for other posts it wrote for other people, our personal assistant can effectively manipulate us through this stimulation.”—Maurice E. Stucke and Ariel Ezrachi, The Subtle Ways Your Digital Assistant Might Manipulate You, November 2016

Fake News!

I’ve got fake news in the title of this talk, but what does all of this have to do with fake news?

First of all we have to look at what constitutes fake news. Fake news is content presented as a news story but with no grounding in reality, let alone journalistic rigour. Fake news roughly fits into three categories:

1. Real issues exaggerated to distract the public.

2. Propaganda, weaponised speech delivered to achieve a political aim – a mixture of truth, exaggeration and deception.

3. “Disinformatzya,” false content deliberately created to muddy the waters of informed public opinion, to make you distrust everything in the news.

Adtech!

We have to ask why all of these forms of not-news dressed up as news have become popular. The answer is adtech:

“This form of digital advertising [adtech] has turned into a massive industry, driven by an assumption that the best advertising is also the most targeted, the most real-time, the most data-driven, the most personal”—Doc Searls, Brands need to fire adtech, March 2017

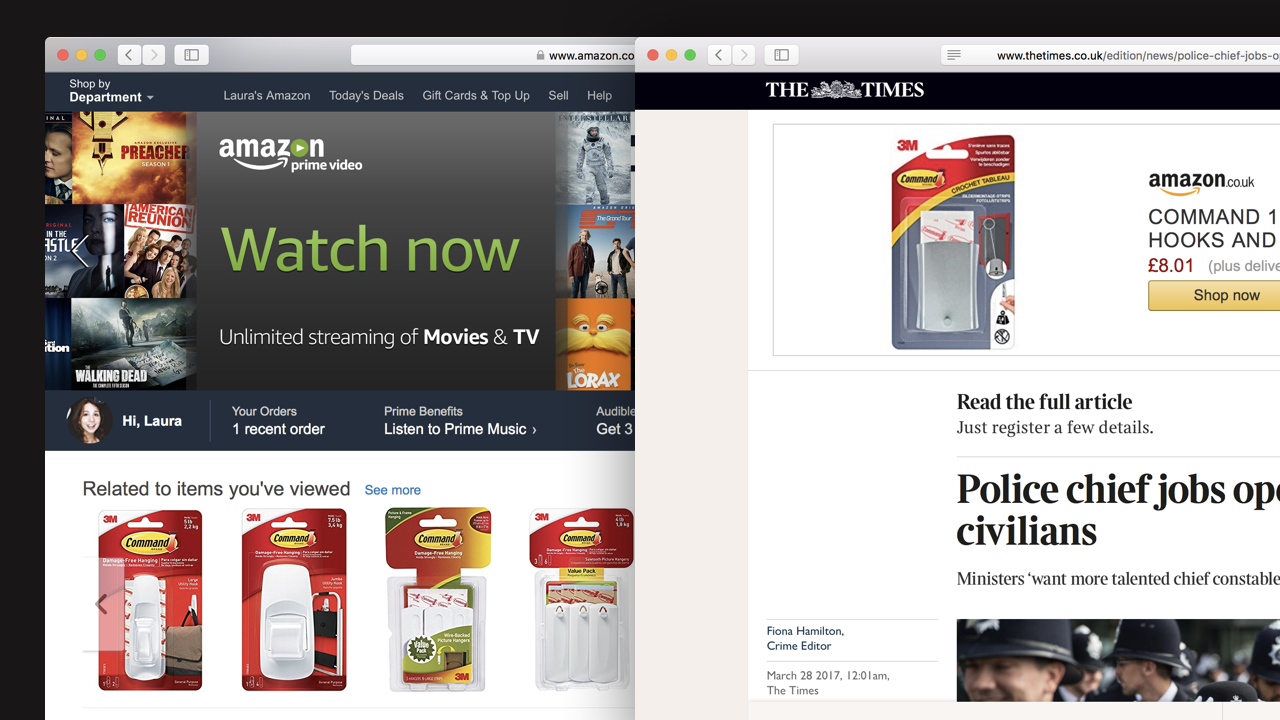

The problem is that adtech powers the ads that show alongside everything. Adtech is behind the pay-per-click ads that show you things that are relevant to the article or to you.

Adtech is the ads that follow you around the web.

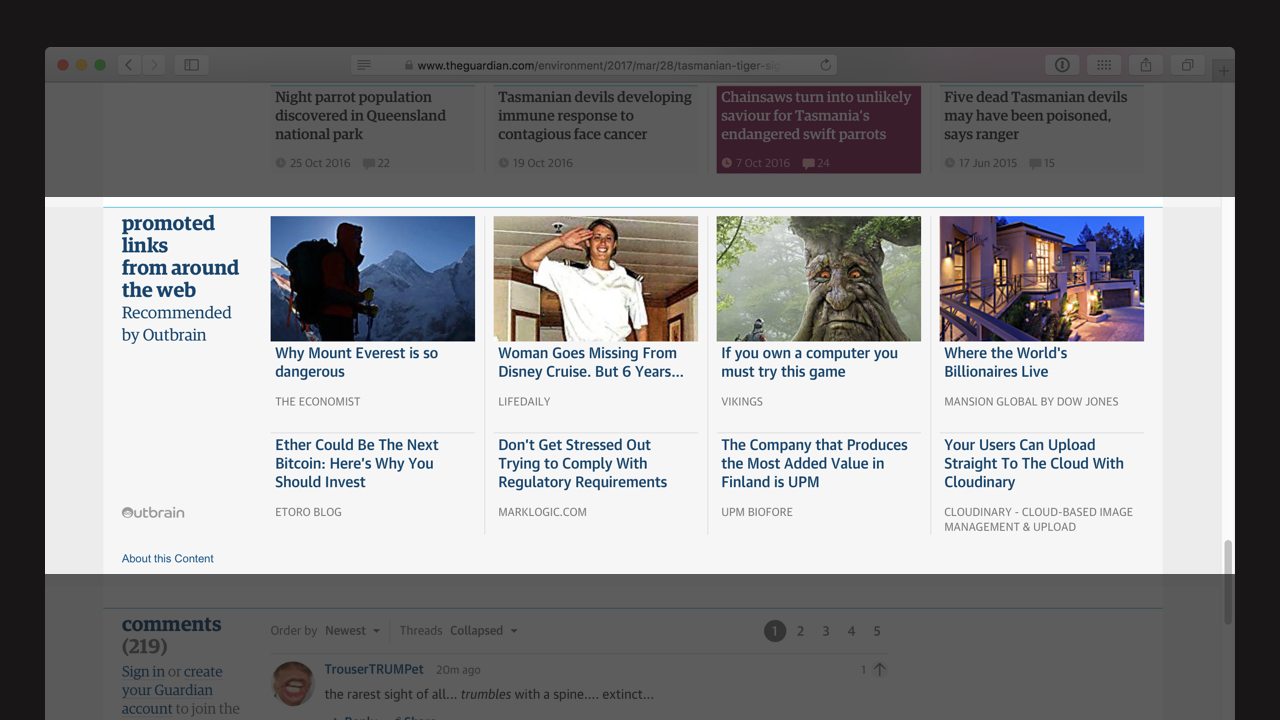

Adtech is the clickbait of intriguing stories that are sometimes dressed up as “Other articles you might like”, “Promoted links,” or “Sponsored content.”

- “It’s adtech that spies on people and violates their privacy.

- It’s adtech that’s full of fraud and a vector for malware.

- It’s adtech that incentivizes publications to prioritize “content generation” over journalism.

- It’s adtech that gives fake news a business model, because the fake is easier to produce than the real, and it pays just as well.”

Wired talked to a Macedonian teenager about his lucrative business posting disinformatzya:

“He posted the link on Facebook, seeding it within various groups devoted to American politics; to his astonishment, it was shared around 800 times. That month—February 2016—Boris made more than $150 off the Google ads on his website. Considering this to be the best possible use of his time, he stopped going to high school.”

And on top of that, as Evgeny Morozov said in the Guardian:

“The problem is not fake news but the speed and ease of its dissemination, and it exists primarily because today’s digital capitalism makes it extremely profitable – look at Google and Facebook – to produce and circulate false but click-worthy narratives.”—Evgeny Morozov, Moral panic over fake news hides the real enemy – the digital giants, January 2017

But how do we solve this problem that is now at the core of journalism?

“Solving the problem of sensationalistic, click-driven journalism likely requires a new business model for news that focuses on its civic importance above profitability.”—Ethan Zuckerman, Fake news is a red herring, January 2017

Not-news is causing problems for the advertisers too, with some advertisers abandoning adtech platforms after their products appeared alongside undesirable and extreme content. But I’m not so sure this will make much of a difference to the pockets of the adtech makers. I wonder: is this adtech business model too lucrative to change?

What can we do to block clickbait and fake news from our lives?

We need to think about what we can do now to block clickbait and fake news from our lives. Assuming our friends aren’t forcing us on to it via social media…

Ad Blockers?

Could ad blockers be the answer? Many people have started blocking trackers using ad blockers. The key issue with this is that ads are not the problem. Trackers are the problem. The third-party tracking scripts are the problem.
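Blocking trackers rather than ads can be as simple as checking each request’s host against a list of tracker domains. Here is a minimal Python sketch of that idea. The domains come from the Onion example earlier in this talk, but the function name and the request URLs are made up for illustration, and a real blocklist is far longer.

```python
from urllib.parse import urlsplit

# Tracker domains named earlier in this talk; a real blocklist is far longer.
TRACKER_DOMAINS = {"doubleclick.net", "taboola.com", "addthis.com", "moatads.com"}


def is_blocked(request_url, blocklist=TRACKER_DOMAINS):
    """True if the request host is a listed domain or one of its subdomains."""
    host = urlsplit(request_url).hostname or ""
    return any(host == domain or host.endswith("." + domain)
               for domain in blocklist)


# Hypothetical requests made while loading a page.
page_requests = [
    "https://www.theonion.com/index.html",
    "https://cdn.taboola.com/loader.js",
    "https://securepubads.doubleclick.net/tag.js",
]
allowed = [url for url in page_requests if not is_blocked(url)]
print(allowed)
```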

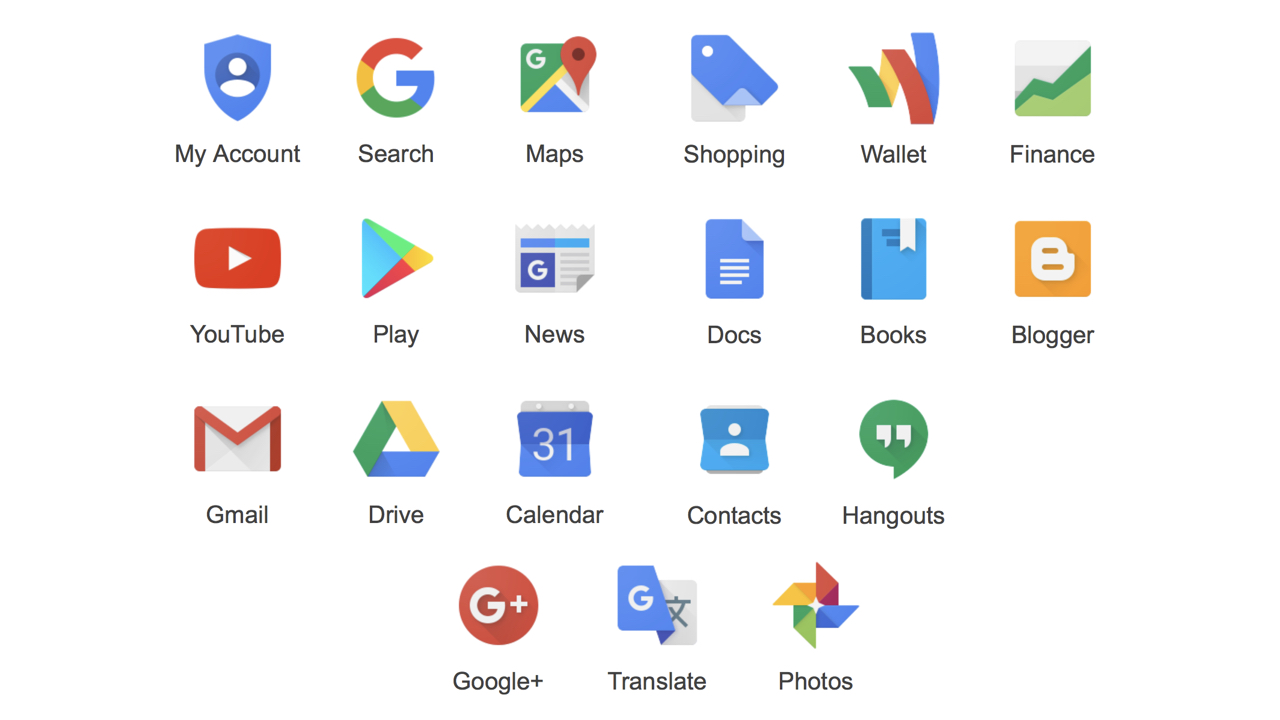

At Ind.ie, we’ve been doing research into trackers, and we found some of the most used third-party scripts on the web:

- google-analytics.com—64.1% of sites researched

- doubleclick.net—54.4% of sites researched

- google.com—41.9% of sites researched

- gstatic.com—32.8% of sites researched

- googleadservices.com—32.3% of sites researched

- facebook.com—29.0% of sites researched

- googlesyndication.com—26.9% of sites researched

- facebook.net—26.4% of sites researched

- google.se—23.0% of sites researched

So far, we’ve found around 78.5% of trackers from the top 10,000 sites come from Google. All of these sites set third-party cookies and/or tracking pixels.

Cookies themselves aren’t inherently problematic. First-party cookies can be useful in storing your username at logins or remembering your preferred language on a site. But we don’t want or need the bad third-party cookies that are just sitting there following us around and spying on everything we do.
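The first-party versus third-party distinction comes down to whether a resource is served from the same site as the page you are visiting. A rough Python sketch follows, with loud assumptions: the function names and example URLs are hypothetical, and the “site” is naively taken to be the last two labels of the hostname, where real browsers consult the Public Suffix List.

```python
from urllib.parse import urlsplit


def site_of(url):
    """Naive 'site' for a URL: the last two labels of the hostname.

    Assumption: real browsers consult the Public Suffix List instead,
    so this mishandles hosts such as example.co.uk.
    """
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def is_third_party(page_url, resource_url):
    """True when the resource is served from a different site than the page."""
    return site_of(page_url) != site_of(resource_url)


page = "https://www.theonion.com/"
print(is_third_party(page, "https://static.theonion.com/app.css"))
print(is_third_party(page, "https://www.google-analytics.com/analytics.js"))
```

A cookie set by the first resource would be first-party under this check; one set by the second would be third-party.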

Surveillance Capitalism

This is what Shoshana Zuboff termed “surveillance capitalism.” Also known as “corporate surveillance” or more simply, “people farming.”

Some people recognising this problem have started using ad blockers to block ads and third party scripts. As consumers of the web, we often use ad blockers, but as web builders, they can inconvenience us if they block what we’ve created.

When it comes to ad blockers, it bears repeating: ads are not the problem. Trackers are the problem. There are some horrifying ads and clickbait out there, but many of these blockers are not doing exactly what you think they are. For example, [Adblock Plus](https://adblockplus.org/en/acceptable-ads) has a whitelist called Acceptable Ads which are ads and trackers that they just let through:

“we share a vision with the majority of our users that not all ads are equally annoying”—[Acceptable Ads criteria](https://adblockplus.org/en/acceptable-ads#criteria)

Acceptable ads are largely included under the criteria that they’re not too dominant or annoying. But as I said before, annoying ads are not the problem. Trackers are the problem. And there’s nothing in the Acceptable Ads criteria about ads tracking you.

“we are being paid by some larger properties that serve non-intrusive advertisements that want to participate in the Acceptable Ads initiative”—[Acceptable Ads Agreements](https://adblockplus.org/acceptable-ads-agreements)

What’s worse is that larger companies are paying for their ads to be shown. Including Google’s ads (and trackers). And we know how wide Google’s trackers reach… Worse still, now Adblock Plus is even selling ads to replace the ones it blocks.

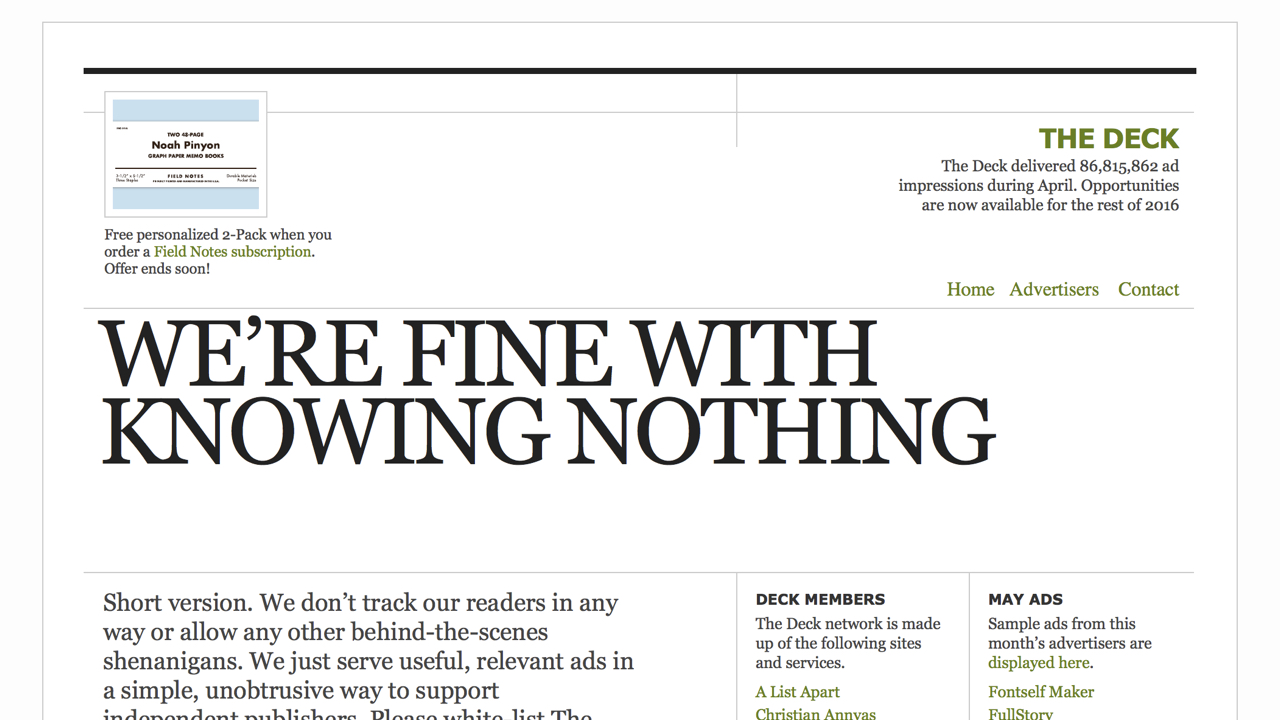

The anti-annoying-ad argument is distracting us from the real problems. Ad networks aren’t inherently bad. For example, The Deck (now sadly closed) was an ad network which targeted its audience by only being used on specialist sites. And they were fine with knowing nothing about the individuals who viewed those ads:

“We don’t track our readers in any way or allow any other behind-the-scenes shenanigans. We just serve useful, relevant ads in a simple, unobtrusive way to support independent publishers.”—The Deck Privacy Policy

Can we still have behavioural ads?

The benefit of The Deck was that it showed static ads to a niche audience, but what about behavioural ads? We could still show relevant ads based on behaviour. Advertising does not need to phone home to the cloud. You could still have behavioural advertising that keeps your information private by doing all the behavioural analysis and decision-making on the device itself. So it could work in theory, but who is going to use these systems when your personal information is so lucrative?
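As a rough illustration of that on-device idea, here is a minimal Python sketch. Everything in it is hypothetical (the class, the catalogue, the topics). The assumption is that every device downloads the same generic ad catalogue, while the interest profile is built and consulted locally and never sent anywhere.

```python
from collections import Counter


class OnDeviceAdSelector:
    """Keeps the interest profile in local memory and never transmits it."""

    def __init__(self, catalogue):
        # catalogue: list of (ad_text, topic) pairs, identical for every device
        self.catalogue = catalogue
        self.interests = Counter()

    def record_page_view(self, topic):
        """Update the local profile; no network request is made."""
        self.interests[topic] += 1

    def choose_ad(self):
        """Pick the ad whose topic the local profile has seen most often."""
        if not self.interests:
            return self.catalogue[0][0]  # no behaviour yet: show the first ad
        return max(self.catalogue, key=lambda ad: self.interests[ad[1]])[0]


catalogue = [("Try our bike lights", "cycling"), ("New cookbook out now", "cooking")]
selector = OnDeviceAdSelector(catalogue)
for topic in ["cooking", "cycling", "cooking"]:
    selector.record_page_view(topic)
print(selector.choose_ad())
```

The advertiser only ever learns that the catalogue was downloaded, not which ad was chosen or why.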

Analytics

There’s one third-party script that almost all of us put on our sites… analytics. Considering everything we’ve looked at so far, how can we use analytics more ethically?

Remember that stat from before, that 64.1% of the top 10,000 sites are using Google Analytics? How many of those sites do you visit? What has that told Google about you? How does that make you feel about using analytics on your own sites? Is it ethical or necessary to track visitors to our site? This isn’t a problem with a yes or no answer, but we need to consider these questions.

Ethics in technology

All of these issues I’ve discussed fall under the bracket of ethics. Ethics are defined as “a set of moral principles, especially ones relating to or affirming a specified group, field, or form of conduct.” So how do they relate to our everyday work in the web and technology industries?

We build the new everyday things

We, the people building the web, are building the new everyday things. We’re building the new infrastructure that powers our society. Much like other systems we use every day that we might pay taxes for: roads, clean water, waste removal, police, fire and rescue services.

How much time do you spend using a road every day? On the average day, I’ll cycle to the office and walk to the supermarket; that’s around 90 minutes using roads and paths. When the average person spends 2 hours 51 minutes of time per day on the internet, it becomes clear how much impact our work can have. And that’s why we need to take responsibility.

We need to take care of people using the new infrastructure we’re building. Having responsibility isn’t usually fun, but it is necessary. And this is why we need ethics, a kind of code of conduct for the people in our position of power of building the web. Mike Monteiro points out how hypocritical we can be…

“[W]hen other industries behave unethically we get upset. Yet, many of us seem to have no problem behaving unethically ourselves. We design databases for collecting information, without giving a second thought what that information will be used for.

As a community have we fallen to the level of debating the importance of ethics that’s usually reserved for politicians, bankers, hedge fund managers, pimps, and bookies?”—Mike Monteiro, Ethics can’t be a side hustle, March 2017

Use your powers for good

We need designers and developers to use their powers for good.

This comes with the understanding that code is not neutral, that whatever we build comes with our biases. We all have biases. And that code is political. I’m not saying that your code is politically aligned to a particular party; you do not need to have the same political affiliations as me to believe that your world outlook has an impact on the things you create.

What happens when someone only tests their algorithms on the other white people around them? Or when a programmer makes a security system that assumes there are no women doctors?

We’re not just building cool stuff for fun, we’re building things that affect other people’s lives.

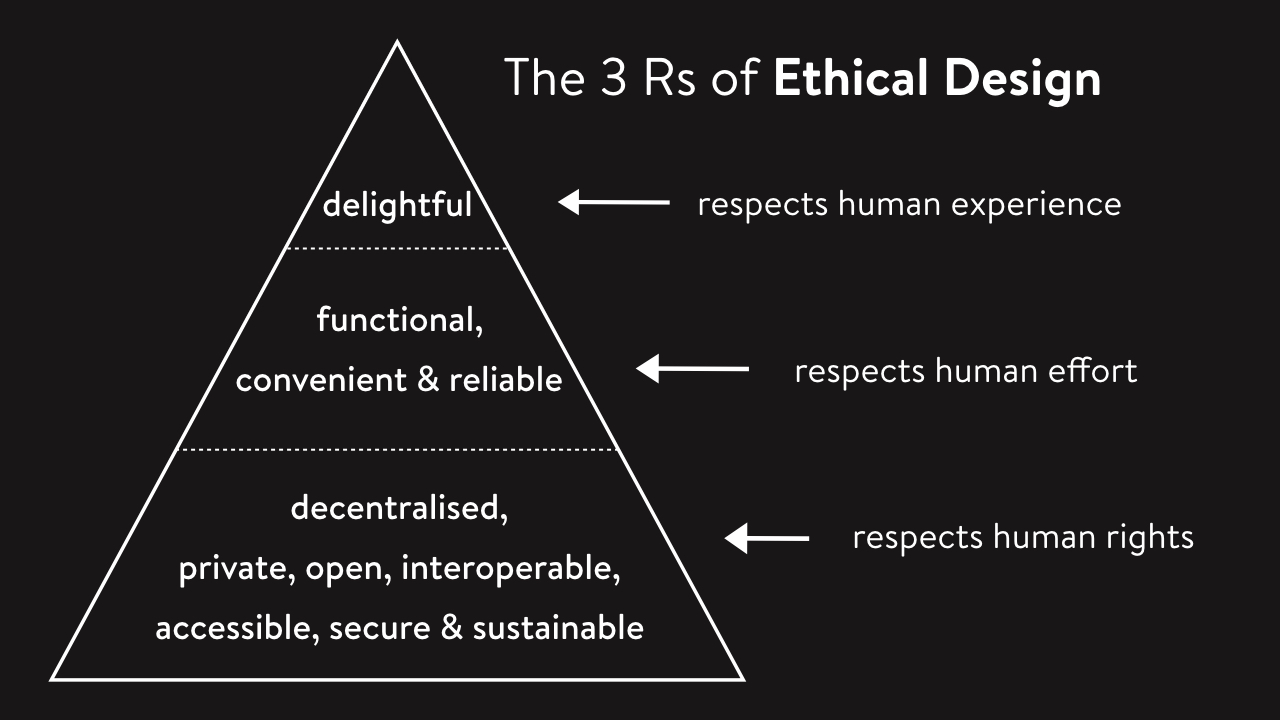

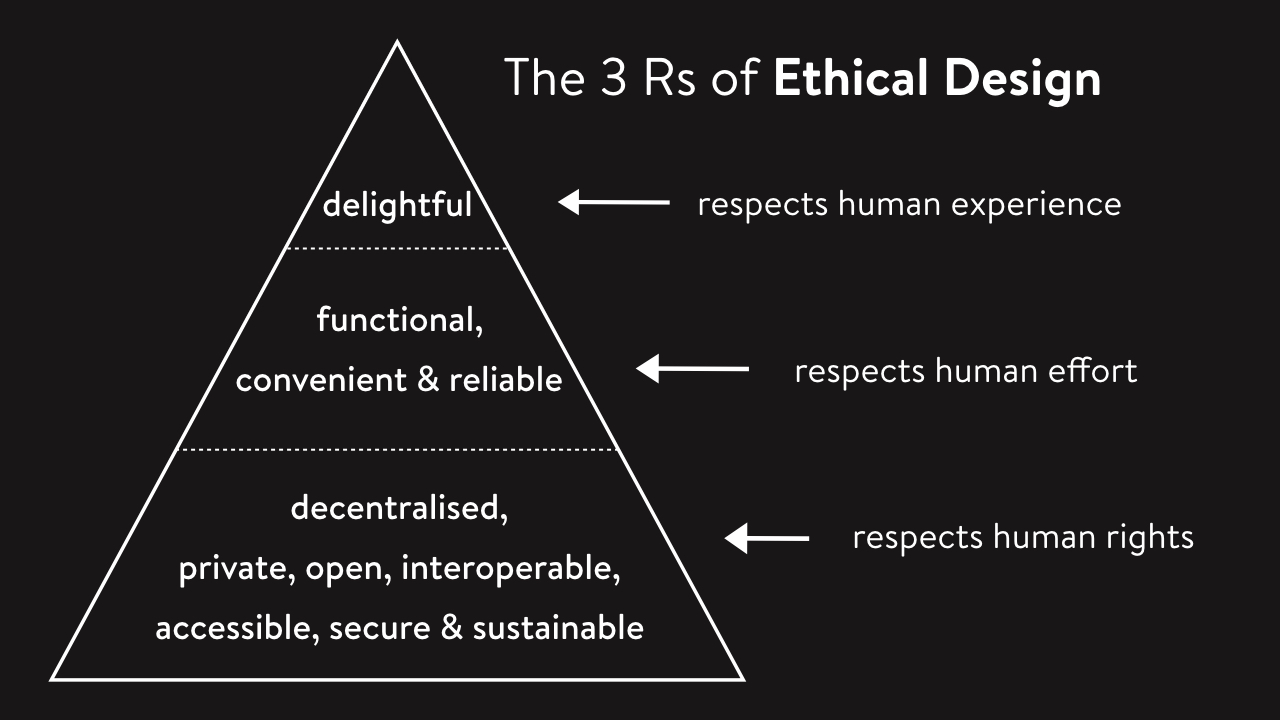

The Ethical Design Manifesto

At Ind.ie, we decided we need an Ethical Design Manifesto for the things we use every day:

We need to build products that are decentralised, private, open, interoperable, accessible, secure and sustainable. Because that means a product will respect human rights.

Decentralised

Decentralisation is something we talk about as developers as being a good thing because we don’t like to rely on centralised systems. It’s why we don’t hotlink all our images and scripts from other sites, and why nobody gets any work done when GitHub is down. We understand that if it’s not on our computers or servers, it’s not under our control. But for some reason we’re happy to give corporations control over all of our data.

With control over data comes power. After the Snowden revelations, people were rightly outraged by how much access our governments had to our browsing information, but not so many people were angry with the corporations who were collecting that information, and handing it right over to the governments. As Bruce Schneier said:

‘The NSA woke up and said “Corporations are spying on the Internet, let’s get ourselves a copy”’—Bruce Schneier

Private

We need to build products that are private. Privacy can come as a result of decentralisation: if your data is only on your device, it’s private to you.

Open

We need to build open products. “Free and open” or “open source” mean that anyone with sufficient technical knowledge can take the code from some software and make their own version. For free. When a product is free and open, it means you can trust it to some degree, because if one version goes in a direction you don’t like, you can use a different version. Though it’s worth noting that developers are in much more of a position to do this than most people who use computers.

Interoperable

We need to build products that are interoperable. Back in the day, we had phones where you entered all your contacts manually. It took ages, and then when you got a new phone, you’d have to enter your contacts all over again. It was a frustrating experience. But using a SIM card to transfer the contacts across made it much easier because SIM cards are interoperable.

Another example of where we don’t have interoperability is in “walled gardens.” It is very common for corporations to want to lock you into their own beautiful ecosystem. That’s what can make it a nightmare switching between Apple and Android.

Accessible

We need to build products that are accessible. Accessibility is making something available to as many people as possible. To make our products more accessible we need to consider diversity, cost, and catering to a variety of needs that aren’t necessarily the same as our own. We must never stop looking for ways to make our work more accessible.

Secure

We need to build products that are secure. In the age of https on everything, we’re more clued up about security. However, security is also confused and conflated with privacy. For example, Google encrypted email and said “yay! all your stuff is now safe and private from hackers!” But that email is not encrypted and private from Google, it just protects your information in transit between you and Google.

Sustainable

We need to build products that are economically, environmentally and culturally sustainable. When a product is sustainable, you know it’s worth putting your time into using it. The product isn’t going to disappear tomorrow with all your information and hard work down the drain.

One of the key elements of sustainability is that a product has a viable long-term business model. (That doesn’t rely on selling your data!) If a startup has been given a huge amount of venture capital without having a business model, chances are they’re not sustainable, and it’s highly likely they’re going to rely on selling your data for profit.

Functional, convenient and reliable

When we’ve ensured our product respects human rights, we can work on making it functional, convenient and reliable. Doing so respects human effort, and these are generally the goals most of us are reaching for when we’re building things.

When a product is functional, convenient and reliable, it actually does the thing, it does the thing in a way that doesn’t get in your way, and it does that thing reliably again and again.

Delightful

Once our product respects human rights and human effort, we can layer the delight on top, to make our product delightful, respecting human experience.

Delightful isn’t just making products fun and fluffy. It’s about creating a genuinely great experience, which is reinforced because it’s built upon the strong foundation of respecting your effort and rights. Basecamp is a good example of this, where they have little touches of delight.

The Basecamp schedule when you haven’t had any past events yet

Basecamp can only get away with whimsical details because they already respect your human rights and effort. As Sara Wachter-Boettcher wrote in a fabulous article last year:

“Quit painting a thin layer of cuteness over fundamentally broken interfaces.”—Dear Tech, You Suck at Delight, Sara Wachter-Boettcher

Layering cuteness on top is not a solution to a badly-built product; it’s just a way to make ourselves feel better about the problem.

At Ind.ie, we call Respecting human rights, Respecting human effort, and Respecting human experience the 3 Rs of Ethical Design.

Many modern products don’t respect our human rights. Instead they’re built on the backs of the humans that use them. These (often Silicon Valley) products may respect human experience and human effort, but they take advantage of their users. They don’t give them privacy or security, interoperability or open technologies. Very rarely are their products accessible or sustainable.

But as an industry, we hail these products. We call them the “disruptors.” In the dictionary, “to disrupt” means “to interrupt (an event, activity, or process) by causing a disturbance or problem.” People making these products call themselves disruptors because they “disrupt” a market. They take down existing sustainable businesses and kill them off. They monopolise and they dump. We need to disrupt the disruptors. We need to disrupt their disruption.

We need to ask ourselves these questions

We need to ask ourselves these questions about what we build. Because we are the gatekeepers of what we create. We don’t have to add tracking to everything, it’s already gotten out of our control.

The business model of the organisations we work for is our business. If what we are building is harming people, we can’t blame someone else for that. We can’t defer responsibility for the products we build. That’s how bad things happen.

Some people say to me “but if I don’t like what Google/Facebook/Other Corporations are doing, surely I don’t have to use their services…?”

Nope. As I said before, social networks are part of society now. Email, booking services, ecommerce are our new everyday things. If I stopped using every site that is sharing my data with Google, I’d be using less than 25% of the web. And I couldn’t use email. We deserve to be able to take part in society, and use everyday things without our privacy being compromised.

Another thing I often hear is “what if I trust Google/Facebook/Other Corporation with my data?” Seriously people, Google isn’t your lover, you shouldn’t have to trust them. Why are we so loyal to faceless corporations?

We need to design and build systems that don’t need to be trusted. If it fits with the Ethical Design Manifesto, you don’t need to trust it, you own your own data, and you can easily go elsewhere if you’re no longer satisfied.

It’s important to realise that not worrying about your data is privilege. If you don’t worry about corporations (and by extension, governments) having access to your data, you are privileged. And quite possibly just foolish. What if you lived in a country where your sexual preference is illegal? What if you lived in a country that wants to deport people with your ethnic background? What if you lived in a country where your religious beliefs can get you killed? What if you needed a loan and your friends were considered too poor? What if you needed medical treatment but your medical insurer decided that your habits made you too high risk?

Look at the world around us. Any of these things could happen tomorrow. If you want to think about it in terms of how trackers affect you, you need to fear for your future self.

You also need to bear in mind that you’re not making these decisions for yourself. If you use Gmail, you are swapping free email for the data of everyone you exchange emails with. If you support your business with tracking, you are making that decision for your visitors. It’s invisible, and your visitors are opted in by default. Is that fair?

As creators we need to make wise decisions on behalf of our visitors. We can be the gatekeepers of harmful decisions. If making these decisions becomes a battle you have to fight with the top of your organisation, there might just be something wrong with the business model. An organisation that makes its money by tracking people, whether they’re doing it themselves, or getting something in exchange for adding scripts to their site, is not going to want to change. They may even find it impossible to change.

As people become more aware of these issues, we need to be careful that if an organisation does appear to change, it’s not just superficial, that they’re not misdirecting us, or telling us something is better when it’s just bad in a different way. Like when WhatsApp switched on end-to-end encryption for hundreds of millions of users. So many people said “Yay! They’re the good guys!” But as Rick Falkvinge said on his blog…

“Would Facebook really allow WhatsApp to throw away the business value in a 19-billion acquisition? Of course it wouldn’t. This demonstrates that the snoop value was in the metadata all along: the knowledge of who talks to whom, when, how, and how often. Not in the actual words communicated.”—Rick Falkvinge

And surprise! WhatsApp is now sharing user data with Facebook.

And remember when Google’s Allo was heralded for its privacy features? Turns out, that was just a bit of announcement PR.

You can learn about the value of metadata by watching the fantastic documentary, A Good American. In this film, Bill Binney, who worked on surveillance for the US, says that metadata is the most valuable information that the NSA can collect. Without metadata, surveilling people is like looking for a needle in a haystack. (I also recommend the recent Oliver Stone Snowden film for learning about how corporate surveillance and government surveillance come together.)

As we become more aware of the problems, the people behind the trackers are getting defensive. Massive German publisher Axel Springer says their “core business is delivering ads to its visitors. Journalistic content is just a vehicle to get readers to view the ads”. According to Lindsay Rowntree, who writes for a marketing and advertising blog…

“The web, as it is today, exists because of an implicit understanding that ads pay for the content consumers love and, therefore, need to be seen.”

—Lindsay Rowntree, Why Three’s Partnership with Shine…, May 2016

Again. No. This is just not true. Ads are the most common business model, but not the only one. Do you want to get all your news from people who just want your eyeballs? And remember, ads are still not the problem. Trackers are the problem.

Corporations need to stop treating us like we’re greedy lab rats, making outrageous demands just by asking “please don’t experiment on us.”

We must question and challenge these unethical practices. And we need to learn to see past the PR. We certainly shouldn’t engage in this PR ourselves. We need to make real things that have meaning, rather than the illusion of having meaning. Don’t be the person shaving a few kilobytes off an image file when someone else is adding 5MB of trackers.

We should also be selfish. Building ethical alternatives to centralised technologies will save our jobs. The average Facebook user spends 50 minutes on Facebook a day. Facebook’s functionality has replaced status updates, photo sharing, company sites, news, and chat, to name just a few.

When it comes to our business use, whatever Facebook hasn’t got covered, Google has a product for. If we want jobs that aren’t at Facebook or Google, we’re going to need to make sure the rest of the web actually exists.

Build and support alternatives

We need to build alternatives, giving ourselves the option to choose a different way, as both the consumers and the builders of the web. And when we find alternatives, we must support them.

When you’re looking for replacements for Facebook and Google products, don’t just look for another behemoth. You can’t replace Google in its entirety, but you can use individual products/services to replace different parts of its functionality. Perhaps DuckDuckGo for search, OpenStreetMap for maps, WordPress for blogging, Fastmail for email. The benefit of using individual products is that no one organisation has all your information.

It’s also worth looking for organisations that have traditional business models. Business models where you pay for a product or service in small one-off or recurring fees. With larger organisations, that’s no guarantee that they won’t track you, but small-to-medium businesses are less likely to take your money and track you too.

It’s also vitally important that we call out bad behaviour and make tracking socially unacceptable. Call out businesses you see tracking you, especially if you also pay them.

Work for more ethical companies

We can’t all just quit our jobs to go work somewhere more ethical, but maybe next time you’re looking for work, do your research on your prospective employers’ business models. Help make a difference by putting your valuable knowledge and skills into building the alternatives, and help us build these bridges from the mainstream technology to the future we want to see.

Build the world you want to live in.

Laura, thanks for posting this — must have been an excellent talk, going by this brilliant write-up. Thank you :)

Excellent…. You hit on some fantastic points that more people need to be aware of. It’s definitely an uphill battle making changes and developing or finding products that can adhere to an ethical business model (I’m changing gears in my company to spend a lot more time working on solutions) but it must be done. To continue along the path that the large corporations have laid out for us “users” will eventually end with no privacy, control, or ownership of our information and ultimately our identities… The writing is on the wall for anyone who takes the time to look, it’s up to us to erase that writing.

Dear Laura,

wonderful article with a lot of information. We’ve been following you closely and even use Better on our Macs. When we started developing our Travel/Digital Nomad blog last year, we thought hard about how to make it sustainable without handing data over to big corporations. Google Analytics was never an option so we did some research and found stetic, a German alternative, that is not free. Still, we bought a one year license, eating up the costs and protecting our visitors while still getting useful data to improve our website.

What that shows is, that you have to invest — nothing is for free these days. Sadly, most people prefer a free service over a paid one.